An algorithm applied to over 200 million patients is more likely to recommend extra health care for relatively healthy white patients over sicker black patients (paper in Science and news coverage). Russia was found to be running influence operations in 6 African countries via 73 Facebook pages, many of which purported to be local news sources, and which also spanned WhatsApp and Telegram (paper from Stanford Internet Observatory and news coverage). An Indigenous elder revealed that the Indigenous consultation that Sidewalk Labs (an Alphabet/Google company) conducted was “hollow and tokenistic”, with zero of the 14 recommendations that arose from the consultation included in Sidewalk Labs’ 1,500-page report, even though the report mentions the Indigenous consultation many times. All these stories occurred just in the last week, the same week during which former chairman of the Alphabet board Eric Schmidt complained that people “don’t need to yell” about bias. Issues of data misuse, including bias, surveillance, and disinformation, continue to be urgent and pervasive. For those of you living in the SF Bay Area, the Tech Policy Workshop (register here) being hosted Nov 16-17 by the USF Center for Applied Data Ethics (CADE) will be an excellent opportunity to learn and engage around these issues (the sessions will be recorded and shared online later). And for those of you living elsewhere, we have several videos to watch and news articles to read now!

Events to attend

Exploratorium After Dark: Matters of Fact

I’m going to be speaking at the Exploratorium After Dark (an adults-only evening program) on Thurs, Nov 7, on Disinformation: The Threat We Are Facing is Bigger Than Just “Fake News”. The event is from 6-10pm (my talk is at 8:30pm) and a lot of the other exhibits sound fascinating. Details and tickets here.

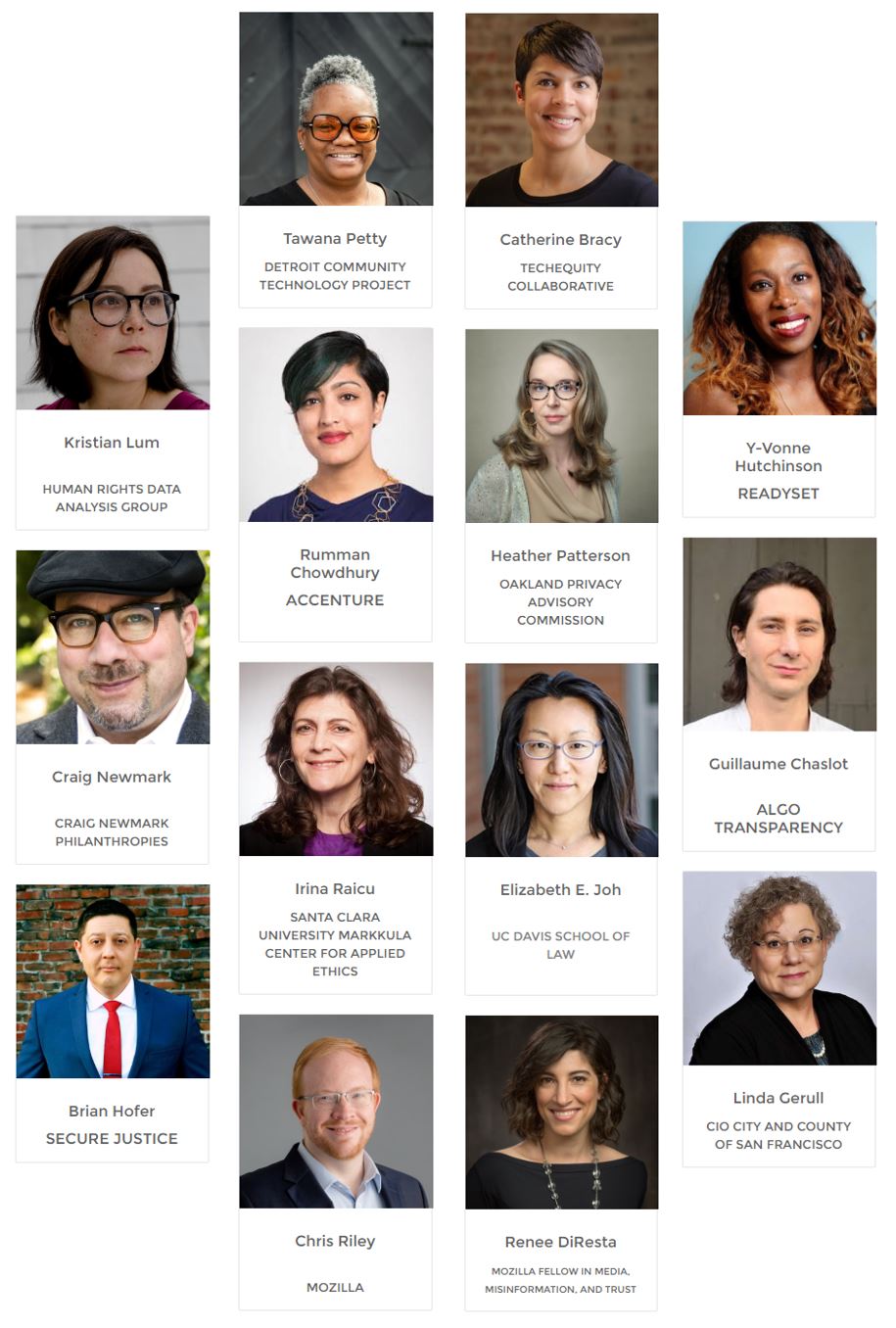

Great speaker line-up for our Nov 16-17 Tech Policy Workshop in SF

Systemic problems, such as increasing surveillance, spread of disinformation, concerning uses of predictive policing, and the magnification of unjust bias, all require systemic solutions. We hope to facilitate collaborations between those in tech and in policy, as well as highlight the need for policy interventions in addressing ethical issues to those working in tech.

USF Center for Applied Data Ethics Tech Policy Workshop

Dates: Nov 16-17, 9am-5:30pm

Location: McLaren Conference Center, 2130 Fulton Street, San Francisco, CA 94117

Breakfast, lunch, and snack included

Details: https://www.sfdatainstitute.org/

Register here

Anyone interested in the impact of data misuse on society & the intersection with policy is welcome!

Info Session about our spring Data Ethics Course

I will be teaching an Intro to Data Ethics course downtown on Monday evenings, from Jan 27 to March 9 (with no class Feb 17). The course is intended for working professionals. Come find out more at an info session on Nov 12.

Videos of our Data Ethics Seminars

We had 3 fantastic speakers for our Data Ethics Seminar this fall: Deborah Raji, Ali Alkhatib, and Brian Brackeen.

Deborah Raji gave a powerful inaugural seminar, opening with the line “There is an urgency to AI ethics & accountability work, because there are currently real people being affected.” Unfortunately, what it means to do machine learning that matters in the real world is different than what academia incentivizes. Using her work with Joy Buolamwini on GenderShades as a case study, she shared how research can be designed with the specific goal of having a concrete impact. GenderShades has been cited in a number of lawsuits, bans, federal bills, and state bills around the use of facial recognition.

Ali Alkhatib gave an excellent seminar on using lenses and frameworks originating in the social sciences to understand problems situated in technology: “Everything we do is situated within cultural & historical backdrops. If we’re serious about ethics & justice, we need to be serious about understanding those histories.”

Brian Brackeen shared his experience founding a facial recognition start-up, the issues of racial bias in facial recognition, and his current work funding under-represented founders in tech. After his talk, we had a fireside chat and a lively Q&A session. Unfortunately, due to a mix-up with the videographer, we do not have a recording of his talk. I encourage you to follow Brian on Twitter, read his powerful TechCrunch op-ed on why he refused to sell facial recognition to law enforcement, and watch this previous panel he was on.

All of these events were open to the public, and we will be hosting more seminars in the spring. To keep up on events, please join our email list:

And finally, I want to share a recent talk I gave on “Getting Specific About Algorithmic Bias.” Through a series of case studies, I illustrate how different types of bias have different sources (and require different approaches to mitigate the bias), debunk several misconceptions about bias, and share some steps towards solutions.

Apply to our data ethics fellowships

We are offering full-time fellowships for those working on problems of applied data ethics, with a particular focus on work that has a direct, practical impact. Applications will be reviewed after November 1, 2019, with roles starting in January or June 2020. We welcome applicants from any discipline (including, but not limited to, computer science, statistics, law, social sciences, history, media studies, political science, public policy, and business). We are looking for people who have shown deep interest and expertise in areas related to data ethics, including disinformation, surveillance/privacy, unjust bias, or tech policy, with a particular focus on practical impact. Here are the job postings:

- Applied Data Ethics Research Fellow (graduate degree required)

- Applied Data Ethics Fellow (no graduate degree)

CADE around the web

News articles:

- The Unsettling Rise of the Urban Narc App (CityLab)
- ‘Deepfakes,’ deep pockets: Facebook spends $10 million on contest for detecting ‘constantly evolving’ videos (Washington Post)
- You’ve been warned: Full body deepfakes are the next step in AI-based human mimicry (Fast Company)
- How to operationalize AI ethics (Venture Beat)
- Google Says Google Translate Can’t Replace Human Translators. Immigration Officials Have Used It to Vet Refugees. (ProPublica)
- Hospital Algorithms Are Biased Against Black Patients, New Research Shows (Medium OneZero)

Blog posts and talks:

- 8 Things You Need to Know about Surveillance (fast.ai blog)
- The problem with metrics is a big problem for AI (fast.ai blog)
- Putting Algorithms in the Org Chart (panel at the Churchill Club)
- Getting Specific About Algorithmic Bias (PyBay)

Join the CADE mailing list: