What is the ethical responsibility of data scientists?

“What we’re talking about is a cataclysmic change… What we’re talking about is a major foreign power with sophistication and ability to involve themselves in a presidential election and sow conflict and discontent all over this country… You bear this responsibility. You’ve created these platforms. And now they are being misused,” Senator Feinstein said this week in a Senate hearing. Who has created a cataclysmic change? Who bears this large responsibility? She was talking to executives at tech companies and referring to the work of data scientists.

Data science can have a devastating impact on our world, as illustrated by inflammatory Russian propaganda being shown on Facebook to 126 million Americans leading up to the 2016 election (the subject of the Senate hearing described above) or by lies spread via Facebook that are fueling ethnic cleansing in Myanmar. Over half a million Rohingya have been driven from their homes due to systematic murder, rape, and burning. Data science is foundational to Facebook’s newsfeed, in determining what content is prioritized and who sees what.

As we data scientists sit behind computer screens coding, we may not give much thought to the people whose lives may be changed by our algorithms. However, we have a moral responsibility to our world and to those whose lives will be impacted by our work. Technology is inherently about humans, and it is perilous to ignore human psychology, sociology, and history while creating tech. Even aside from our ethical responsibility, you could serve time in prison for the code you write, like the Volkswagen engineer who was sentenced to 3.5 years in prison for helping develop software to cheat on federal emissions tests. This is what his employer asked him to do, but following your boss’s orders doesn’t absolve you of responsibility and is not an excuse that will protect you in court.

As a data scientist, you may not have much say in product decisions, but you can ask questions and raise issues. While it can be uncomfortable to stand up for what is right, you are in a fortunate position as part of only 0.3-0.5% of the global population who know how to code. With this knowledge comes a responsibility to use it for good. There are many reasons why you may feel trapped in your job (needing a visa, supporting a family, being new to the industry); however, I have found that people in unethical or toxic work environments (my past self included) consistently underestimate their options. If you find yourself in an unethical environment, please at least try applying for other jobs. The demand for data scientists is high, and if you are currently working as a data scientist, there are most likely other companies that would like to hire you.

Unintended Consequences

One thing we should all be doing is thinking about how bad actors could misuse our technology. Here are a few key areas to consider:

- How could trolls use your service to harass vulnerable people?

- How could an authoritarian government use your work for surveillance? (here are some scary surveillance tools)

- How could your work be used to spread harmful misinformation or propaganda?

- What safeguards could be put in place to mitigate the above?

Data Science Impacts the World

The consequences of algorithms can be not only dangerous, but even deadly. Facebook is currently being used to spread dehumanizing misinformation about the Rohingya, an ethnic minority in Myanmar. As described above, over half a million Rohingya have been driven from their homes due to systematic murder, rape, and burning. For many in Myanmar, Facebook is their only news source. As quoted in the New York Times, one local official of a village with numerous restrictions prohibiting Muslims (the Rohingya are Muslim while the majority of the country is Buddhist) admits that he has never met a Muslim, but says “[they] are not welcome here because they are violent and they multiply like crazy with so many wives and children. I have to thank Facebook, because it is giving me the true information in Myanmar.”

Abe Gong, CEO of Superconductive Health, discusses a criminal recidivism algorithm used in U.S. courtrooms that included data about whether a person’s parents separated and if their father had ever been arrested. To be clear, this means that people’s prison sentences were longer or shorter depending on things their parents had done. Even if this increased the accuracy of the model, it is unethical to include this information, as it is completely beyond the control of the defendants. This is an example of why data scientists shouldn’t just unthinkingly optimize for a simple metric, but must also think about what type of society we want to live in.

Runaway Feedback Loops

Evan Estola, lead machine learning engineer at Meetup, discussed the example of men expressing more interest than women in tech meetups. Meetup’s algorithm could recommend fewer tech meetups to women, and as a result, fewer women would find out about and attend tech meetups, which could cause the algorithm to suggest even fewer tech meetups to women, and so on in a self-reinforcing feedback loop. Evan and his team made the ethical decision for their recommendation algorithm to not create such a feedback loop. It is encouraging to see a company not just unthinkingly optimize a metric, but to consider their impact.

While Meetup chose to avoid such an outcome, Facebook provides an example of allowing a runaway feedback loop to run wild. Facebook radicalizes users interested in one conspiracy theory by introducing them to more. As Renee DiResta, a researcher on the proliferation of disinformation, writes, “once people join a single conspiracy-minded [Facebook] group, they are algorithmically routed to a plethora of others. Join an anti-vaccine group, and your suggestions will include anti-GMO, chemtrail watch, flat Earther (yes, really), and ‘curing cancer naturally’ groups. Rather than pulling a user out of the rabbit hole, the recommendation engine pushes them further in.”

Yet another example is a predictive policing algorithm that predicts more crime in certain neighborhoods, causing more police officers to be sent to those neighborhoods, which can result in more crime being recorded in those neighborhoods, and so on. Computer science research on Runaway Feedback Loops in Predictive Policing illustrates how this phenomenon arises and how it can be prevented.
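
To make the mechanism concrete, here is a deliberately tiny sketch of such a loop. It is a toy model of my own for illustration, not the model from the research mentioned above: the function name `simulate_patrols`, the two neighborhoods, and all of the numbers are hypothetical. Both neighborhoods have exactly the same true crime rate, but crime is only recorded where a patrol is sent, and the patrol is always sent wherever the records are highest.

```python
def simulate_patrols(true_rates, initial_records, days):
    """Toy feedback loop: one patrol per day goes to the neighborhood with the
    most recorded crime, and crime is only recorded where the patrol goes."""
    records = list(initial_records)
    for _ in range(days):
        # The "prediction" is simply the neighborhood with the most records so far.
        target = max(range(len(records)), key=lambda i: records[i])
        # Only the patrolled neighborhood can add to the records,
        # at that neighborhood's true (expected) daily rate.
        records[target] += true_rates[target]
    return records


# Hypothetical numbers: identical true crime rates, a tiny initial difference in records.
true_rates = [1.0, 1.0]
initial_records = [11.0, 10.0]

final = simulate_patrols(true_rates, initial_records, days=100)
share = final[0] / sum(final)
print(final, round(share, 3))
```

After 100 days, neighborhood 0 has 111 recorded incidents and neighborhood 1 still has 10, even though the underlying crime rates never differed. The records end up reflecting where the police were sent rather than where crime happened, which is why the allocation rule itself, and not just the model's accuracy, needs scrutiny.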

Myths: “This is a neutral platform”, “How users use my tech isn’t my fault”, “Algorithms are impartial”

As someone outside the tech industry but who sees a lot of brand new tech, actor Kumail Nanjiani of the show Silicon Valley provides a helpful perspective. He recently tweeted that he and other cast members are often shown tech that scares them with its potential for misuse. Nanjiani writes, “And we’ll bring up our concerns to them. We are realizing that ZERO consideration seems to be given to the ethical implications of tech. They don’t even have a pat rehearsed answer. They are shocked at being asked. Which means nobody is asking those questions. ‘We’re not making it for that reason but the way ppl choose to use it isn’t our fault. Safeguards will develop.’ But tech is moving so fast. That there is no way humanity or laws can keep up… Only ‘Can we do this?’ Never ‘should we do this?’”

A common defense in response to calls for stronger ethics or accountability is for technologists such as Mark Zuckerberg to say that they are building neutral platforms. This defense doesn’t hold up, because any technology requires a number of decisions to be made. In the case of Facebook, decisions such as what to prioritize in the newsfeed, what metrics (such as ad revenue) to optimize for, what tools and filters to make available to advertisers vs users, and the firing of human editors have all influenced the product (as well as the political situation of many countries). Sociology professor Zeynep Tufekci argued in the New York Times that Facebook selling ads targeted to “Jew haters” was not a one-off failure, but rather an unsurprising outcome from how the platform is structured.

Others claim that they cannot act to curb online harassment or hate speech as that would contradict the principle of free speech. Anil Dash, CEO of Fog Creek Software, writes, “the net effect of online abuse is to silence members of [under-represented] communities. Allowing abuse hurts free speech. Communities that allow abusers to dominate conversation don’t just silence marginalized people, they also drive away any reasonable or thoughtful person who’s put off by that hostile environment.” All tech companies are making decisions about who to include in their communities, whether it is through action or implicitly through inaction. Valerie Aurora debunks similar arguments in a post on the paradox of tolerance, explaining how free speech can be reduced overall when certain groups are silenced and intimidated. Choosing not to take action about abuse and harassment is still a decision, and it’s a decision that will have a large influence on who uses your platform.

Some data scientists may see themselves as impartially analyzing data. However, as iRobot director of data science Angela Bassa said, “It’s not that data can be biased. Data is biased.” Know how your data was generated and what biases it may contain. We are encoding and even amplifying societal biases in the algorithms we create. In a recent interview with Wired, Kate Crawford, co-founder of the AI Now Institute and principal researcher at Microsoft, explains that data is not neutral, data cannot be neutralized, and “data will always bear the marks of its history.” We need to understand that history and what it means for the systems we build.

Bias

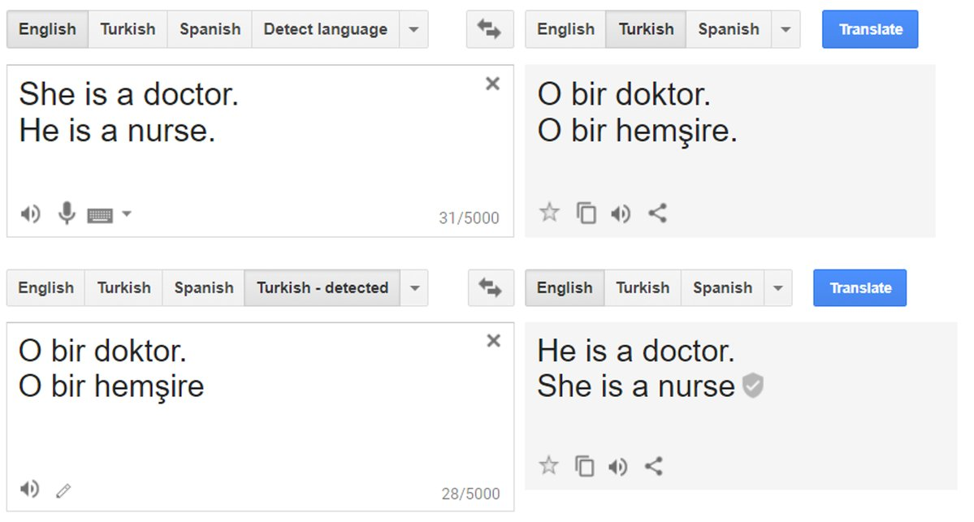

Although I am tired of writing and speaking about bias, I will continue to do so, as it seems that many haven’t gotten the message. I was dismayed to see influential machine learning professor Pedro Domingos recently tweet that machine learning algorithms don’t discriminate, and I’m horrified now to see that his tweet garnered 30 retweets and 95 likes. The examples of bias in data science are myriad and include:

- Google Photos automatically labeling Black people as “gorillas”

- Software to assess criminal recidivism risk that is twice as likely to mistakenly predict that Black defendants are high risk

- Google’s popular Word2Vec language library creating sexist analogies such as man→computer programmer :: woman→homemaker

- Neural networks learning that “hotness” is having light skin

- An app to compare job candidates’ word choice, tone, and facial movements with current employees, which Princeton Professor Arvind Narayanan described as “AI whose only conceivable purpose is to perpetuate societal biases”

- Google Translate converting gender neutral sentences to “He is a doctor. She is a nurse”

These biased outcomes arise for a number of reasons, including biased data sets and lack of diversity in the teams building the products. Using a held-out test set and avoiding overfitting is not just good practice, but also an ethical imperative. Overfitting often means that the error rates are higher on types of data that are not well-represented in the training set, quite literally under-represented or minority data.
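
One practical way to act on this is to break held-out error down by subgroup instead of reporting a single number. The snippet below is a minimal sketch with made-up labels, predictions, and group names (`"a"` and `"b"` are placeholders, and `error_rate_by_group` is a helper written for this illustration, not part of any library).

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return the fraction of wrong predictions within each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical held-out data: group "b" is under-represented (2 of 10 rows).
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a"] * 8 + ["b"] * 2

# Overall error rate across all held-out rows.
overall = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
# The same errors, split by group.
rates = error_rate_by_group(y_true, y_pred, groups)
print(overall, rates)
```

In this made-up example the overall error rate is 10%, which sounds fine, yet every one of those errors falls on group "b", where half of the predictions are wrong. An aggregate metric can hide exactly the failure that matters most to the people affected by it.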

Your Responsibility in Hiring

We will continue to see mistakes like those listed above until we have more diverse teams building our technology. If you are involved in interviewing candidates or weighing in on hiring decisions, you have a responsibility to work towards a less biased hiring process. Note that seeing yourself as “gender-blind” or “color-blind” doesn’t work, and perceiving yourself as objective makes you even more biased. Companies must start doing more than just paying empty lip service to diversity.

I’ve done extensive research on retaining women at your company and on bias in interviews, including practical tips to address both. Stripe Engineer Julia Evans thought she could do a better job at conducting phone interviews, so she created a rubric for evaluating candidates for herself, which was eventually adopted as a company-wide standard. She wrote an excellent post about Making Small Culture Changes that should be helpful regardless of what role you are in.

Systemic & Regulatory Response

This blog post is written with an audience of individual data scientists in mind, but systemic and regulatory responses are necessary as well. Renee DiResta draws an analogy between the advent of high frequency trading in the financial markets and the rise of bots and misinformation campaigns on social networks. She argues that just as regulations were needed for the financial markets to combat increasing fragility and bad actors, regulations are needed for social networks to combat increasing fragility and bad actors. Kate Crawford points out that there is a large gap between proposed ethical guidelines and what is happening in practice, because we don’t have accountability mechanisms in place.

Further Resources

The topic of ethics in data science is too huge and complicated to be thoroughly covered in a single blog post. I encourage you to do more reading on this topic and to discuss it with your co-workers and peers. Here are some resources to learn more:

- List of Readings from Ethics for CS & EE course at Oregon Health & Science University

- List of Readings from Ethics and Policy in Data Science course at Cornell University

- List of Readings from Information Ethics & Policy course at University of Colorado Boulder

- AI Now Institute at NYU which examines the social implications of AI. Check out their AI Now 2017 Report

- Fairness, Accountability, and Transparency in Machine Learning organization, including their list of relevant scholarship

- Readings from Data & Society Research Institute

- Critical Algorithm Studies: a Reading List from the Social Media Collective

- Selected publications from PERVADE project on data ethics for computational research

- Compilation of articles on bias in machine learning created by Data Science Renee

- The Immortal Myths About Online Abuse by Anil Dash

- My workshop on Word Embeddings, Bias in ML, Why You Don’t Like Math, & Why AI Needs You

- My keynote at QCon.ai Analyzing & Preventing Unconscious Bias in Machine Learning

- My post on Facebook’s lackluster response to its role in the genocide in Myanmar (especially compared to its response to a potential financial penalty in Germany), what concrete regulations could help us, the continued push of Facebook lobbyists to gut the few privacy laws we have, and the role that Free Basics (aka Internet.org) has played in global hate speech and violence.

Your Responsibility

You can do awesome and meaningful things with data science (such as diagnosing cancer, stopping deforestation, increasing farm yields, and helping patients with Parkinson’s disease), and you can (often unintentionally) enable terrible things with data science, as the examples in this post illustrate. Being a data scientist entails both great opportunity and great responsibility to use our skills in ways that do not make the world a worse place. Ultimately, doing data science is about humans: not just the users of our products, but everyone who will be impacted by our work.