Summary

Today fast.ai is releasing v1 of a new free open source library for deep learning, called fastai. The library sits on top of PyTorch v1 (released today in preview), and provides a single consistent API to the most important deep learning applications and data types. fast.ai’s recent research breakthroughs are embedded in the software, resulting in significantly improved accuracy and speed over other deep learning libraries, whilst requiring dramatically less code. You can download it today from conda, pip, or GitHub or use it on Google Cloud Platform. AWS support is coming soon.

About fast.ai

fast.ai’s mission is to make the power of state of the art deep learning available to anyone. In order to make that happen, we do three things:

Research how to apply state of the art deep learning to practical problems quickly and reliably

Build software to make state of the art deep learning as easy to use as possible, whilst remaining easy to customize for researchers wanting to explore hypotheses

Teach courses so that as many people as possible can use the research results and software

You may well already be familiar with our courses. Hundreds of thousands of people have already taken our Practical Deep Learning for Coders course, and many alumni are now doing amazing work with their new skills, at organizations like Google Brain, OpenAI, and GitHub. (Many of them now actively contribute to our busy deep learning practitioner discussion forums, along with other members of the wider deep learning community.)

You may also have heard about some of our recent research breakthroughs (with help from our students and collaborators!), including breaking deep learning speed records and achieving a new state of the art in text classification.

The new fastai library

So that covers the research and teaching parts of the three listed areas—but what about software? Today we’re releasing v1.0 of our new fastai deep learning library, which has been under development for the last 18 months. fastai sits on top of PyTorch, which provides the foundation for our work. When we announced the initial development of fastai over one year ago, we described many of the advantages that PyTorch provides us. For instance, we talked about how we could “use all of the flexibility and capability of regular python code to build and train neural networks”, and “we were able to tackle a much wider range of problems”. The PyTorch team has been very supportive throughout fastai’s development, including contributing critical performance optimizations that have enabled key functionality in our software.

fastai is the first deep learning library to provide a single consistent interface to all the most commonly used deep learning applications for vision, text, tabular data, time series, and collaborative filtering. This is important for practitioners, because it means if you’ve learned to create practical computer vision models with fastai, then you can use the same approach to create natural language processing (NLP) models, or any of the other types of model we support.

Google Cloud Platform are making fastai v1 available to all their customers from today in an experimental Deep Learning image for Google Compute Engine, including ready-to-run notebooks and pre-installed datasets. To use it, simply head over to the Deep Learning images page on Google Cloud Marketplace, set up the configuration for your instance, set the framework to PyTorch 1.0RC, and click “deploy”. That’s it: you now have a VM with Jupyter Lab, PyTorch 1.0 and fastai on it! Read more about how you can use the images in this post from Google’s Viacheslav Kovalevskyi. And if you want to use fastai in a GPU-powered Jupyter Notebook, it’s now a single click away thanks to fastai support on Salamander, also released today.

Good news too from Bratin Saha, VP, Amazon Web Services: “To support fast.ai’s mission to make the power of deep learning available at scale, the fastai library will soon be available in the AWS Deep Learning AMIs and Amazon SageMaker”. And we’re very grateful for the enthusiasm from Microsoft’s AI CTO, Joseph Sirosh, who said “At Microsoft, we have an ambitious goal to make AI accessible and valuable to every organization. We are happy to see Fast.AI helping democratize deep learning at scale and leveraging the power of the cloud.”

Early users

Semantic code search at GitHub

fast.ai are enthusiastic users of GitHub’s collaboration tools, and many of the GitHub team work with fast.ai tools too - even the CEO of GitHub studies deep learning using our courses! Hamel Husain, a Senior Machine Learning Scientist at GitHub who has been studying deep learning through fast.ai for the last two years, says:

“The fast.ai course has been taken by data scientists and executives at Github alike ushering in a new age of data literacy at GitHub. It gave data scientists at GitHub the confidence to tackle state of the art problems in machine learning, which were previously believed to be only accessible to large companies or folks with PhDs.”

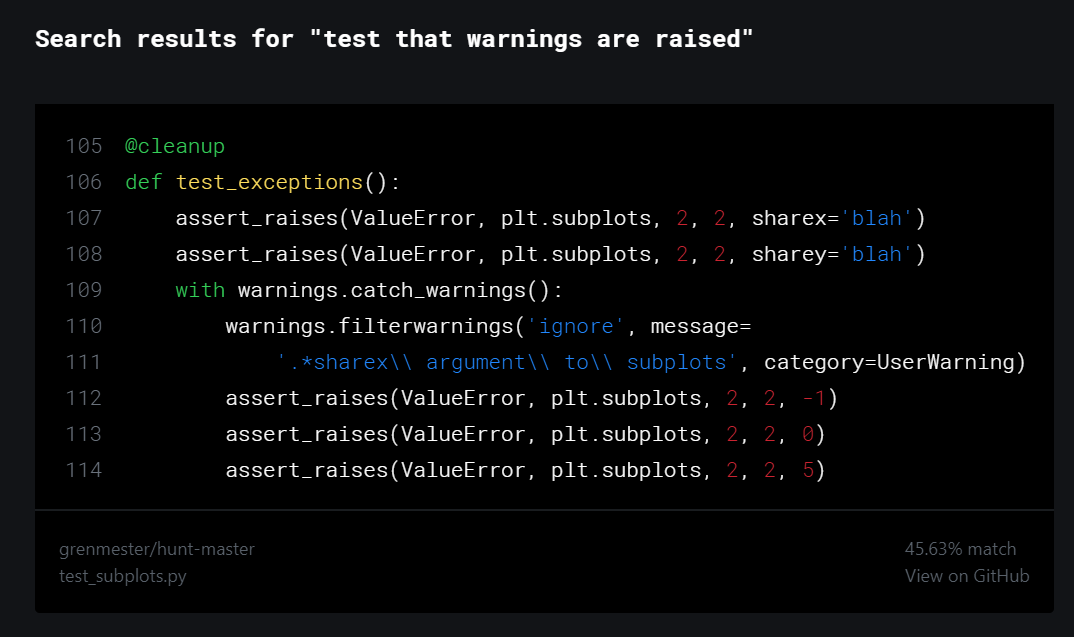

Husain and his colleague Ho-Hsiang Wu recently released a new experimental tool for semantic code search, which allows GitHub users to find useful code snippets using questions written in plain English. In a blog post announcing the tool, they describe how they switched from Google’s TensorFlow Hub to fastai, because it “gave us easy access to state of the art architectures such as AWD LSTMs, and techniques such as cyclical learning rates with random restarts”.

Husain has been using a pre-release version of the fastai library for the last 12 months. He told us:

“I choose fast.ai because of its modularity, high level apis that implemented state of the art techniques, and innovations that reduce the need for tons of compute but with the same performance characteristics. The semantic code search demo is only the tip of the iceberg, as folks in sales, marketing, fraud are currently leveraging the power of fastai to bring transformative change to their business areas.”

Music generation

One student who stood out in our last fast.ai deep learning course was Christine McLeavey Payne, who had already had a fascinating journey as an award-winning classical pianist with an SF Symphony chamber group, a high performance computing expert in the finance world, and a neuroscience and medical researcher at Stanford. Her journey has only gotten more interesting since, and today she is a Research Fellow at the famous OpenAI research lab. In her most recent OpenAI project, she used fastai to help her create Clara: A Neural Net Music Generator. Here is some of her generated chamber music. Christine says:

“The fastai library is an amazing resource. Even when I was just starting in deep learning, it was easy to get a fastai model up and running in only a few lines of code. At that point, I didn’t understand the state-of-the-art techniques happening under the hood, but still they worked, meaning my models trained faster, and reached significantly better accuracy.”

Christine has even created a human or computer quiz that you can try for yourself; see if you can figure which pieces were generated by her algorithm! Clara is closely based on work she did on language modeling for one of her fast.ai student projects, and leverages the fastai library’s support for recent advances in natural language processing. Christine told us:

“It’s only more recently that I appreciate just how important these details are, and how much work the fastai library saves me. It took me just under two weeks to get this music generation project up and getting great initial results. I’m certain that speed couldn’t have been possible without fastai.”

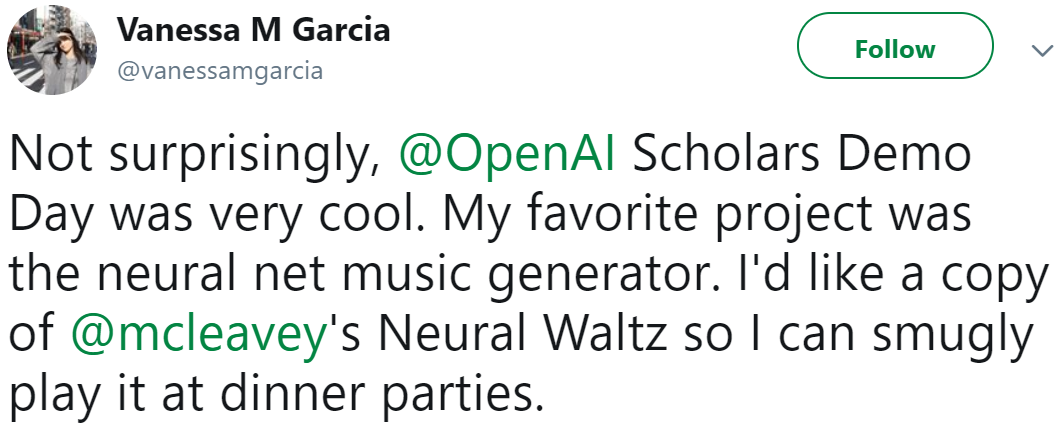

We think that Clara is a great example of the expressive power of deep learning: in this case, a model designed to generate and classify text has been used to generate music, with relatively few modifications. “I took a fastai Language Model almost exactly (very slight changes in sampling the generation) and experimented with ways to write out the music in either a ‘notewise’ or ‘chordwise’ encoding,” she wrote on Twitter. The result was a crowd favorite, with Vanessa M Garcia, a Senior Researcher at IBM Watson, declaring it her top choice at OpenAI’s Demo Day.

fastai for art projects

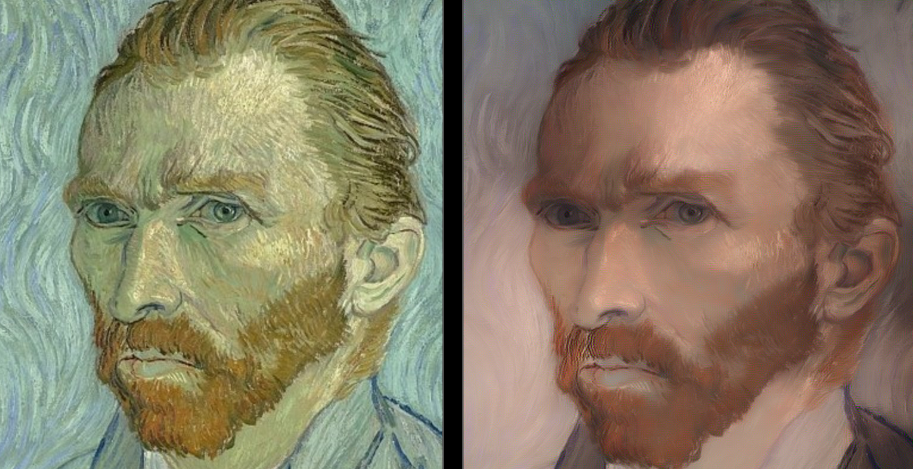

Architect and Investor Miguel Pérez Michaus has been using a pre-release version of fastai to research a system for art experiments that he calls Style Reversion. This is definitely a case where a picture tells a thousand words, so rather than try to explain what it does, I’ll let you see for yourself:

Pérez Michaus says he likes designing with fastai because “I know that it can get me where [Google’s TensorFlow library] Keras can not, for example, whenever something ‘not standard’ has to be achieved”. As an early adopter, he’s seen the development of the library over the last 12 months:

“I was lucky enough to see alpha version of fastai evolving, and even back then its power and flexibility was evident. Additionally, it was fully usable for people like myself, with domain knowledge but no formal Computer Science background. And it only has gotten better. My quite humble intuition about the future of deep learning is that we will need a fine grained understanding of what is really goes on under the hood, and in that landscape I think fastai is going to shine.”

fastai for academic research

Entrepreneurs Piotr Czapla and Marcin Kardas are the co-founders of n-waves, a deep learning consulting company. They used fastai to develop a novel algorithm for text classification in Polish, based on ideas shown in fast.ai’s Cutting Edge Deep Learning for Coders course. Polish is challenging for NLP, since it is a morphologically rich language (e.g. number, gender, animacy, and case are all collapsed into a word’s suffix). The algorithm that Czapla and Kardas developed won first prize in the top NLP academic competition in Poland, and a paper based on this new research will be published soon. According to Czapla, the fastai library was critical to their success:

“I love that fastai works well for normal people that do not have hundreds of servers at their disposal. It supports quick development and prototyping, and has all the best deep learning practices incorporated into it.”

The course and community have also been important for them:

“fast.ai’s courses opened my eyes to deep learning, and helped me to think and develop intuitions around how deep learning really works. Most of the answers to my questions are already on the forum somewhere, just a search away. I love how the notes from the lectures are composed into Wiki topics, and that other students are creating transcriptions of the lessons so that they are easier to find.”

Example: Transfer learning in computer vision

fast.ai’s research is embedded in the fastai library, so you get the benefits of it automatically. Let’s take a look at an example of what that means…

Kaggle’s Dogs vs Cats competition has been a favorite part of our courses since the very start, and it represents an important class of problems: transfer learning of a pre-trained model. So we’ll take a look at how the fastai library performs on this task.

Before we built fastai, we did most of our research and teaching using Keras (with the TensorFlow backend), and we’re still big fans of it. Keras really led the way in showing how to make deep learning easier to use, and it’s been a big inspiration for us. Today, it is (for good reason) the most popular way to train neural networks. In this brief example we’ll compare Keras and fastai on what we think are the three most important metrics: amount of code required, accuracy, and speed.

Here’s all the code required to do 2-stage fine tuning with fastai - not only is there very little code to write, there are very few parameters to set:

    data = data_from_imagefolder(Path('data/dogscats'),
        ds_tfms=get_transforms(), tfms=imagenet_norm, size=224)
    learn = ConvLearner(data, tvm.resnet34, metrics=accuracy)
    # Stage 1: train with the pre-trained layers frozen
    learn.fit_one_cycle(6)
    # Stage 2: unfreeze and fine-tune the whole model
    learn.unfreeze()
    learn.fit_one_cycle(4, slice(1e-5,3e-4))

Let’s compare the two libraries on this task (we’ve tried to match our Keras implementation as closely as possible, although since Keras doesn’t support all the features that fastai provides, it’s not identical):

| | fastai resnet34* | fastai resnet50 | Keras |
|---|---|---|---|
| Lines of code (excluding imports) | 5 | 5 | 31 |
| Stage 1 error | 0.70% | 0.65% | 2.05% |
| Stage 2 error | 0.50% | 0.50% | 0.80% |
| Test time augmentation (TTA) error | 0.30% | 0.40% | N/A* |
| Stage 1 time | 4:56 | 9:30 | 8:30 |
| Stage 2 time | 6:44 | 12:48 | 17:38 |

* Keras does not provide resnet34 or TTA

(It’s important to understand that these improved results over Keras in no way suggest that Keras isn’t an excellent piece of software. Quite the contrary! If you tried to complete this task with almost any other library, you would need to write far more code, and would be unlikely to see better speed or accuracy than Keras. That’s why we’re showing Keras in this comparison - because we’re admirers of it, and it’s the strongest benchmark we know of!)
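Both training calls in the fastai snippet use `fit_one_cycle`, named after the "1cycle" policy, in which the learning rate ramps up early in training and is then annealed back down. The sketch below is a simplified, standalone illustration of that general shape, not fastai's implementation: the function name `one_cycle_lr`, the linear warm-up, the cosine decay, and the `div_factor` value are all assumptions chosen for the example.

```python
import math


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.25, div_factor=25.0):
    """Learning rate at `step` (0-based) for a simplified one-cycle schedule.

    Hypothetical helper: warms up linearly from max_lr / div_factor to max_lr
    over the first `pct_start` fraction of training, then follows a cosine
    curve back down towards zero. All defaults are illustrative assumptions.
    """
    warmup_steps = max(1, round(total_steps * pct_start))
    start_lr = max_lr / div_factor
    if step < warmup_steps:
        t = step / warmup_steps
        return start_lr + t * (max_lr - start_lr)
    t = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max_lr * 0.5 * (1.0 + math.cos(math.pi * t))


# Build the schedule for 100 optimizer steps and find where it peaks.
schedule = [one_cycle_lr(s, 100, max_lr=3e-3) for s in range(100)]
peak_step = schedule.index(max(schedule))
```

With these assumed settings the rate climbs for the first quarter of training, peaks at `max_lr`, and then decays for the remaining three quarters, which is the "one cycle" the method name refers to.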

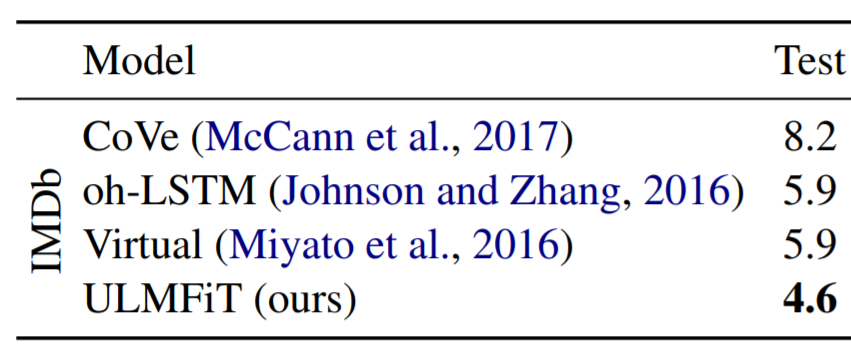

fastai also shows similarly strong performance for NLP. The state of the art text classification algorithm is ULMFiT. Here’s the relative error of ULMFiT versus previous top ranked algorithms on the popular IMDb dataset, as shown in the ULMFiT paper:

fastai is currently the only library to provide this algorithm. Because the algorithm is built into fastai, you can match the paper’s results with similar code to that shown above for Dogs vs Cats. Here’s how you train the language model for ULMFiT:

    data = data_from_textcsv(LM_PATH, Tokenizer(), data_func=lm_data)
    learn = RNNLearner.language_model(data, drop_mult=0.3,
        pretrained_fnames=['lstm_wt103', 'itos_wt103'])
    learn.freeze()
    learn.fit_one_cycle(1, 1e-2, moms=(0.8,0.7))
    learn.unfreeze()
    learn.fit_one_cycle(10, 1e-3, moms=(0.8,0.7), pct_start=0.25)

Under the hood - PyTorch v1

A critical component of fastai is the extraordinary foundation provided by PyTorch, v1 (preview) of which is also being released today. fastai isn’t something that replaces and hides PyTorch’s API, but instead is designed to expand and enhance it. For instance, you can create new data augmentation methods by simply creating a function that does standard PyTorch tensor operations; here’s the entire definition of fastai’s jitter function:

    def jitter(c, size, magnitude:uniform):
        return c.add_((torch.rand_like(c)-0.5)*magnitude*2)

As another example, fastai uses and extends PyTorch’s concise and expressive Dataset and DataLoader classes for accessing data. When we wanted to add support for image segmentation problems, it was as simple as defining this standard PyTorch Dataset class:

    class MatchedFilesDataset(DatasetBase):
        def __init__(self, x:Collection[Path], y:Collection[Path]):
            assert len(x)==len(y)
            self.x,self.y = np.array(x),np.array(y)

        def __getitem__(self, i):
            return open_image(self.x[i]), open_mask(self.y[i])

This means that as practitioners want to dive deeper into their models, data, and training methods, they can take advantage of all the richness of the full PyTorch ecosystem. Thanks to PyTorch’s dynamic nature, programmers can easily debug their models using standard Python tools. In many areas of deep learning, PyTorch is the most common platform for researchers publishing their research; fastai makes it simple to test out these new approaches.

Under the hood - fastai

In the coming months we’ll be publishing academic papers and blog posts describing the key pieces of the fastai library, as well as releasing a new course that will walk students through how the library was developed from scratch. To give you a taste, we’ll touch on a couple of interesting pieces here, focussing on computer vision.

One thing we care a lot about is speed. That’s why we competed in Stanford’s DAWNBench competition for rapid and accurate model training, where (along with our collaborators) we have achieved first place in every category we entered. If you want to match our top single-machine CIFAR-10 result, it’s as simple as four lines of code:

    tfms = ([pad(padding=4), crop(size=32, row_pct=(0,1), col_pct=(0,1)),
             flip_lr(p=0.5)], [])
    data = data_from_imagefolder('data/cifar10', valid='test',
                                 ds_tfms=tfms, tfms=cifar_norm)
    learn = Learner(data, wrn_22(), metrics=accuracy).to_fp16()
    learn.fit_one_cycle(25, wd=0.4)

Much of the magic is buried underneath that to_fp16() method call. Behind the scenes, we’re following all of Nvidia’s recommendations for mixed precision training. No other library that we know of provides such an easy way to leverage Nvidia’s latest technology, which gives two to three times better performance compared to previous approaches.
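To give a feel for why mixed precision training needs care, here is a small standalone sketch of two commonly described ingredients: scaling the loss so that tiny gradients survive in half precision, and keeping a higher-precision "master" copy of the weights. It uses only Python's `struct` module to round numbers to FP16, and is an illustration under our own assumptions (the helper `to_fp16`, the gradient value, and the scale of 1024 are made up for the example), not a description of what `to_fp16()` does internally.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


# 1) A tiny gradient underflows to zero when stored directly in FP16...
grad = 1e-8
unscaled = to_fp16(grad)

# ...but survives if it is multiplied by a loss scale before being stored,
# and divided by the same scale afterwards in higher precision.
loss_scale = 1024.0  # illustrative value, not a recommendation
rescued = to_fp16(grad * loss_scale) / loss_scale

# 2) A small update is lost if the weight itself lives only in FP16,
# because the nearest representable value to 1.0001 is still 1.0.
weight, update = 1.0, 1e-4
fp16_weight = to_fp16(to_fp16(weight) + to_fp16(update))

# Applying the same update to a higher-precision master copy keeps it.
master_weight = weight + update
```

The point of the sketch is only that naive half-precision arithmetic silently drops small values, which is why a one-line switch that handles these details is convenient.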

Another thing we care a lot about is accuracy. We want your models to work well not just on your training data, but on new test data as well. Therefore, we’ve built an entirely new computer vision library from scratch that makes it easy to develop and use data augmentation methods, to improve your model’s performance on unseen data. The new library uses a new approach to minimize the number of lossy transformations that your data goes through. For instance, take a look at the three images below:

On the left is the original low resolution image from the CIFAR-10 dataset. In the middle is the result of zooming and rotating this image using standard deep learning augmentation libraries. On the right is the same zoom and rotation, using fastai v1. As you can see, with fastai the detail is kept much better; for instance, take a look at how the pilot’s window is much crisper in the right-hand image than the middle image. This change to how data augmentation is applied means that practitioners using fastai can use far more augmentation than users of other libraries, resulting in models that generalize better.
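The intuition behind minimizing lossy transformations can be shown with a one-dimensional toy. Each time a signal is resampled with interpolation it gets slightly blurrier, so applying two transforms one after another loses more detail than combining them and resampling once. The code below is our own simplified analogy using shifts of a 1-D signal; the helper `shift_linear` is hypothetical and is not how fastai implements its image transforms.

```python
import math


def shift_linear(signal, offset):
    """Shift `signal` right by `offset` samples using linear interpolation.

    Samples that would be read from outside the signal are treated as 0.
    """
    n = len(signal)
    out = []
    for i in range(n):
        src = i - offset          # input position that lands on output i
        lo = math.floor(src)
        frac = src - lo
        a = signal[lo] if 0 <= lo < n else 0.0
        b = signal[lo + 1] if 0 <= lo + 1 < n else 0.0
        out.append((1 - frac) * a + frac * b)
    return out


signal = [0.0, 0.0, 1.0, 0.0, 0.0]  # a single sharp "pixel"

# Two half-sample shifts, each with its own interpolation pass.
two_passes = shift_linear(shift_linear(signal, 0.5), 0.5)

# The same total shift, combined first and resampled only once.
one_pass = shift_linear(signal, 0.5 + 0.5)
```

After two passes the sharp peak has been smeared across three samples, while the single combined pass keeps it intact. The same reasoning applies to zooming and rotating an image: the fewer interpolation steps, the crisper the result.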

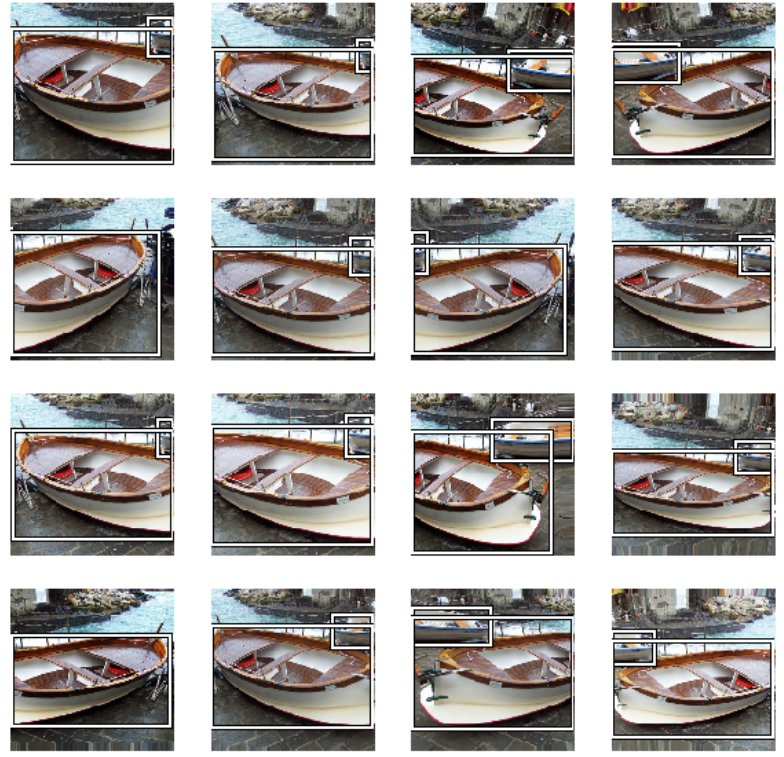

These data augmentations even work automatically with non-image data such as bounding boxes. For instance, here’s an example of how fastai works with an image detection dataset, automatically tracking each bounding box through all augmentations:
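To make the idea of tracking a box through an augmentation concrete, here is a toy sketch for the simplest case, a horizontal flip. This is not fastai code: the functions `flip_lr_image`, `flip_lr_box`, and `box_pixels`, and the `(x_min, y_min, x_max, y_max)` box convention with an exclusive upper bound, are assumptions made for the illustration.

```python
def flip_lr_image(rows):
    """Mirror an image stored as a list of pixel rows."""
    return [row[::-1] for row in rows]


def flip_lr_box(box, image_width):
    """Mirror an (x_min, y_min, x_max, y_max) box; x_max and y_max are exclusive."""
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)


def box_pixels(rows, box):
    """Return the pixel values that fall inside `box`."""
    x_min, y_min, x_max, y_max = box
    return [rows[y][x] for y in range(y_min, y_max) for x in range(x_min, x_max)]


# A 6x4 image whose only non-zero pixels are the "object" inside the box.
box = (1, 0, 3, 2)
image = [[1 if 1 <= x < 3 and 0 <= y < 2 else 0 for x in range(6)]
         for y in range(4)]

# Apply the same flip to the image and to its box.
flipped_image = flip_lr_image(image)
flipped_box = flip_lr_box(box, image_width=6)
```

Because the box receives the same geometric transform as the pixels, it still encloses exactly the object after the flip. Doing this by hand for every augmentation is error-prone, which is why having the library track it automatically is useful.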

These kinds of thoughtful features can be found throughout the fastai library. Over the coming months we’ll be doing deep dives into many of them, for those of you interested in the details of how fastai is implemented behind the scenes.

Thanks!

Many thanks to the PyTorch team. Without PyTorch, none of this would have been possible. Thanks also to Amazon Web Services, who sponsored fast.ai’s first Researcher in Residence, Sylvain Gugger, who has contributed much of the development of fastai v1. Thanks also to fast.ai alumni Fred Monroe, Andrew Shaw, and Stas Bekman, who have all made significant contributions, to Yaroslav Bulatov, who was a key contributor to our most recent DAWNBench project, to Viacheslav Kovalevskyi, who handled Google Cloud integration, and of course to all the students and collaborators who have helped make the community and software successful.