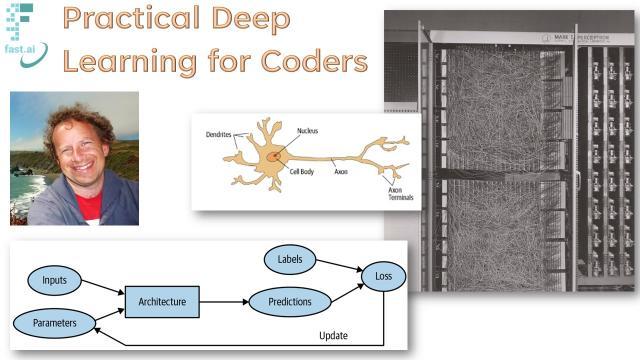

A new edition

Today we’re releasing Practical Deep Learning for Coders 2022, a complete from-scratch rewrite of fast.ai’s most popular course, two years in the making. Previous fast.ai courses have been studied by hundreds of thousands of students, from all walks of life, from all parts of the world. fast.ai’s videos have been viewed over 6,000,000 times already! The major differences from previous editions are:

- A much bigger focus on interactive explorations. Students in the course create simple GUIs for building decision trees, linear classifiers, and non-linear models by hand, and use that experience to develop a deep, intuitive understanding of how foundational algorithms work

- A broader mix of libraries and services is used, including the Hugging Face ecosystem (Transformers, Datasets, Spaces, and the Model Hub), scikit-learn, and Gradio

- Coverage of new architectures, such as ConvNeXt, Vision Transformers (ViT), and DeBERTa v3

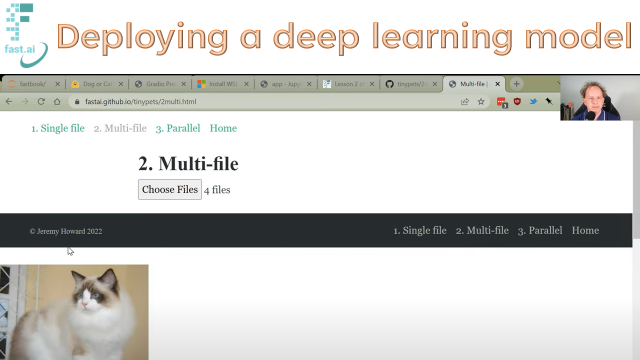

By the end of the second lesson, students will have built and deployed their own deep learning model on their own data. Many students post their course projects to our forum. For instance, if there’s an unknown dinosaur in your backyard, maybe you need this dinosaur classifier!

Topics covered in this year’s course include:

- Build and train deep learning models for computer vision, natural language processing, tabular analysis, and collaborative filtering problems

- Create random forests and regression models

- How to turn your models into web applications, and deploy them

- Use PyTorch, the world’s fastest-growing deep learning software, plus popular libraries like fastai and Hugging Face

- Why and how deep learning models work, and how to use that knowledge to improve the accuracy, speed, and reliability of your models

- The latest deep learning techniques that really matter in practice

- How to implement the fundamentals of deep learning, including stochastic gradient descent and a complete training loop, from scratch

About the course

There are 9 lessons, and each lesson is around 90 minutes long. The course is based on our 5-star rated book, which is freely available online. No special hardware or software is needed: the course shows how to use free resources for both building and deploying models. University math isn’t needed either; the necessary calculus and linear algebra are introduced as needed during the course.

The course is taught by me, Jeremy Howard. I lead the development of fastai, the software used throughout this course. I have been using and teaching machine learning for around 30 years. I was the top-ranked competitor globally in machine learning competitions on Kaggle (the world’s largest machine learning community) two years running. Following this success, I became the President and Chief Scientist of Kaggle. Since first using neural networks over 25 years ago, I have led many companies and projects that have machine learning at their core, including founding the first company to focus on deep learning and medicine, Enlitic (chosen by MIT Tech Review as one of the “world’s smartest companies”), and Optimal Decisions, the first company to develop a fully optimised pricing algorithm for insurance.

Students and results

Many students have told us how they’ve become multiple gold medal winners of international machine learning competitions, received offers from top companies, and had research papers published. For instance, Isaac Dimitrovsky told us that he had “been playing around with ML for a couple of years without really grokking it… [then] went through the fast.ai part 1 course late last year, and it clicked for me”. He went on to win first place in the prestigious international RA2-DREAM Challenge competition! He developed a multistage deep learning method for scoring radiographic hand and foot joint damage in rheumatoid arthritis, taking advantage of the fastai library.

Alumni of previous editions of Practical Deep Learning for Coders have gone on to jobs at organizations like Google Brain, OpenAI, Adobe, Amazon, and Tesla, published research at top conferences such as NeurIPS, and created startups using skills they learned here. Pedro Cuenca, lead developer of the widely acclaimed Camera+ app, went on after completing the course to add deep learning features to his product, which was then featured by Apple for its “machine learning magic”.

Peter Norvig, author of Artificial Intelligence: A Modern Approach and previously the Director of Research at Google, reviewed our book (which this course is based on) and had this to say:

‘Deep Learning is for everyone’ we see in Chapter 1, Section 1 of this book, and while other books may make similar claims, this book delivers on the claim. The authors have extensive knowledge of the field but are able to describe it in a way that is perfectly suited for a reader with experience in programming but not in machine learning. The book shows examples first, and only covers theory in the context of concrete examples. For most people, this is the best way to learn. The book does an impressive job of covering the key applications of deep learning in computer vision, natural language processing, and tabular data processing, but also covers key topics like data ethics that some other books miss.

About deep learning

Deep learning is a computer technique to extract and transform data (with use cases ranging from human speech recognition to animal imagery classification) by using multiple layers of neural networks. A lot of people assume that you need all kinds of hard-to-find stuff to get great results with deep learning, but as you’ll see in this course, those people are wrong. Here are a few things you absolutely don’t need to do world-class deep learning:

| Myth (don’t need) | Truth |
|---|---|
| Lots of math | Just high school math is sufficient |
| Lots of data | We’ve seen record-breaking results with <50 items of data |
| Lots of expensive computers | You can get what you need for state-of-the-art work for free |

The lessons

1: Getting started

In the first five minutes you’ll see a complete end-to-end example of training and using a model that’s so advanced it was considered at the cutting edge of research capabilities in 2015! We discuss what deep learning and neural networks are, and what they’re useful for.

We look at examples of deep learning for computer vision object classification, segmentation, tabular analysis, and collaborative filtering.

2: Deployment

This lesson shows how to design your own machine learning project, create your own dataset, train a model using your data, and finally deploy an application on the web. We use Hugging Face Spaces with Gradio for deployment, and also use JavaScript to implement an interface in the browser. (Deploying to other services looks very similar to the approach in this lesson.)

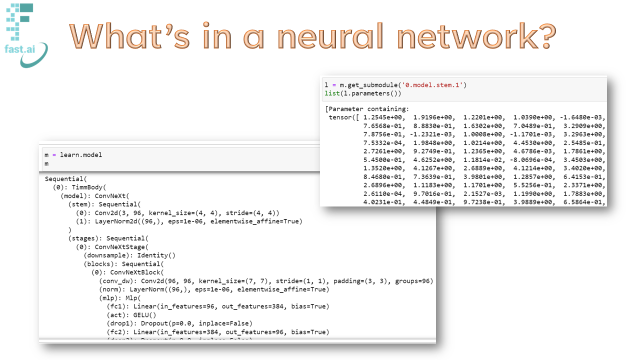

3: Neural net foundations

Lesson 3 is all about the mathematical foundations of deep learning, such as stochastic gradient descent (SGD), matrix products, and the flexibility of linear functions layered with non-linear activation functions. We focus particularly on a popular combination: a linear function followed by the rectified linear unit (ReLU), which replaces negative values with zero.
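To make these ideas a little more concrete, here is a minimal sketch in plain Python (no PyTorch, and not the course’s own notebook code). It defines ReLU, shows that adding two ReLU-activated linear functions already produces a non-linear shape (here, the absolute value), and fits a straight line with stochastic gradient descent by repeatedly nudging the weights against the gradient of the squared error. The function names, toy data, and learning rate are illustrative assumptions.

```python
import random


def relu(x: float) -> float:
    """Rectified linear unit: negative inputs become zero."""
    return max(0.0, x)


def two_relu_model(x: float, w1: float, b1: float, w2: float, b2: float) -> float:
    """Sum of two ReLU-activated linear functions: a tiny piecewise-linear model."""
    return relu(w1 * x + b1) + relu(w2 * x + b2)


def sgd_fit_line(data, lr=0.01, epochs=200, seed=0):
    """Fit y ~ w*x + b by SGD on squared error, updating after every example."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the examples in a new random order each epoch
        for x, y in data:
            err = (w * x + b) - y
            # d(err**2)/dw = 2*err*x and d(err**2)/db = 2*err
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b


if __name__ == "__main__":
    # With w1=1, b1=0, w2=-1, b2=0 the model computes |x|.
    print(two_relu_model(-3.0, 1.0, 0.0, -1.0, 0.0))  # 3.0
    points = [(x, 2 * x + 1) for x in [-2, -1, 0, 1, 2]]
    w, b = sgd_fit_line(points)
    print(f"w={w:.3f}, b={b:.3f}")
```

On this noiseless toy data the fitted values end up very close to w = 2 and b = 1. In the course itself, PyTorch computes these gradients automatically rather than by hand.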

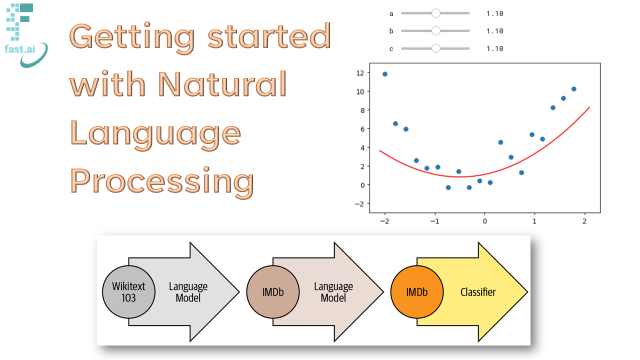

4: Natural Language (NLP)

We look at how to analyse natural language documents using Natural Language Processing (NLP). We focus on the Hugging Face ecosystem, especially the Transformers library, and the vast collection of pretrained NLP models. The project for this lesson is to classify the similarity of phrases used to describe US patents. A similar approach can be applied to a wide variety of practical problems, in fields as wide-reaching as marketing, logistics, and medicine.

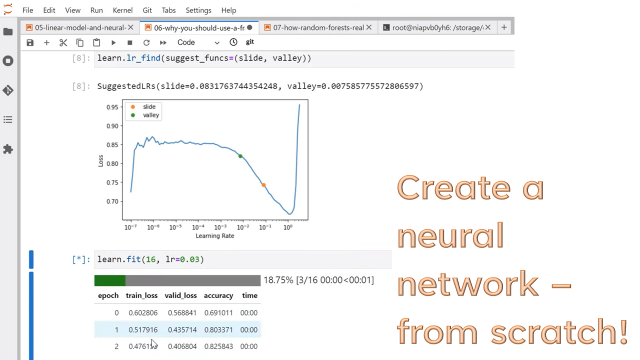

5: From-scratch model

In this lesson we look at how to create a neural network from scratch using Python and PyTorch, and how to implement a training loop for optimising the weights of a model. We build up from a single-layer regression model to a neural net with one hidden layer, and then to a deep learning model. Along the way we also look at how we can use a special function called sigmoid to make binary classification models easier to train, and we learn about metrics.
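The sketch below gives a rough idea of what such a training loop looks like for a single-layer binary classifier. It is plain Python rather than the PyTorch used in the lesson: a linear function is passed through sigmoid, full-batch gradient descent minimises the mean binary cross-entropy loss, and accuracy serves as the metric. The toy data, learning rate, and function names are assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_binary_classifier(xs, ys, lr=0.5, epochs=500):
    """Gradient descent on mean binary cross-entropy for p = sigmoid(w*x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(w * x + b)
            # For sigmoid followed by cross-entropy, dLoss/dz simplifies to (p - y).
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def accuracy(xs, ys, w, b):
    """Fraction of examples whose thresholded prediction matches the label."""
    preds = [1 if sigmoid(w * x + b) > 0.5 else 0 for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


if __name__ == "__main__":
    xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
    ys = [0, 0, 0, 1, 1, 1]
    w, b = train_binary_classifier(xs, ys)
    print(accuracy(xs, ys, w, b))  # 1.0
```

Because sigmoid keeps every prediction between 0 and 1, the loss gradient stays well behaved even when the raw linear output is far from the target label, which is one reason it helps with training binary classifiers.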

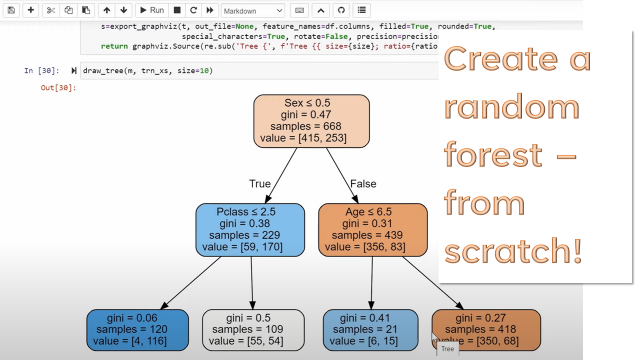

6: Random forests

Random forests started a revolution in machine learning 20 years ago. For the first time, there was a fast and reliable algorithm which made almost no assumptions about the form of the data, and required almost no preprocessing. In lesson 6, you’ll learn how a random forest really works, and how to build one from scratch. And, just as importantly, you’ll learn how to interpret random forests to better understand your data.
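To give a feel for the mechanics, here is a deliberately stripped-down random forest in plain Python. It is not the lesson’s implementation. Each “tree” is a single split (a decision stump) chosen to minimise the size-weighted variance of the targets on each side, every tree sees a bootstrap sample of the rows and a random subset of the columns, and the forest averages the trees’ predictions. Real random forests grow much deeper trees; the names and defaults here are hypothetical.

```python
import random
from statistics import mean, pvariance


def best_split(rows, ys, feature):
    """Return (score, threshold) for the split on `feature` with the lowest
    size-weighted variance of ys on each side; threshold is None if no split exists."""
    best = (float("inf"), None)
    for t in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, ys) if row[feature] <= t]
        right = [y for row, y in zip(rows, ys) if row[feature] > t]
        if not left or not right:
            continue
        score = len(left) * pvariance(left) + len(right) * pvariance(right)
        if score < best[0]:
            best = (score, t)
    return best


def fit_stump(rows, ys, features):
    """Fit a one-split tree using the best feature among `features`."""
    _, t, f = min(
        (best_split(rows, ys, feat) + (feat,) for feat in features),
        key=lambda cand: cand[0],
    )
    if t is None:  # every candidate feature is constant: predict the mean
        m = mean(ys)
        return lambda row: m
    left = mean(y for row, y in zip(rows, ys) if row[f] <= t)
    right = mean(y for row, y in zip(rows, ys) if row[f] > t)
    return lambda row: left if row[f] <= t else right


def fit_forest(rows, ys, n_trees=25, n_features=1, seed=0):
    """Average many stumps, each fit on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n_cols = len(rows[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample rows with replacement
        feats = rng.sample(range(n_cols), n_features)
        trees.append(fit_stump([rows[i] for i in idx], [ys[i] for i in idx], feats))
    return lambda row: mean(tree(row) for tree in trees)


if __name__ == "__main__":
    # Column 0 drives the target; column 1 is an irrelevant distractor.
    rows = [(x, (x * 7) % 3) for x in range(10)]
    ys = [10.0 if x > 5 else 0.0 for x in range(10)]
    forest = fit_forest(rows, ys)
    print(forest((9, 1)), forest((0, 1)))
```

Notice that a split only compares a column against a threshold, so rescaling or otherwise transforming a column in an order-preserving way does not change which rows go left or right. That is part of why random forests need so little preprocessing.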

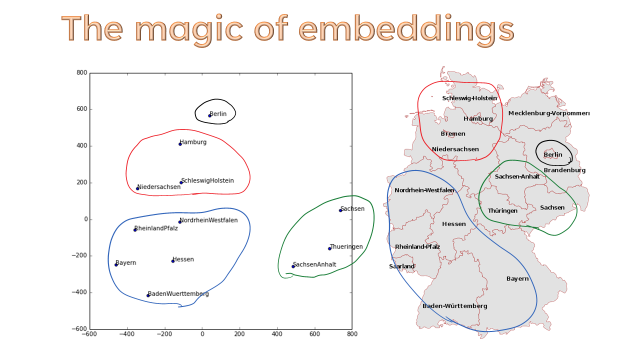

7: Collaborative filtering and embeddings

You interact nearly every day with recommendation systems: algorithms that guess what products and services you might like, based on your past behavior. These systems largely rely on collaborative filtering, an approach based on linear algebra that fills in the missing values in a matrix. In this lesson we’ll see two ways to do this: one based on a classic linear algebra formulation, and one based on deep learning. We finish off our study of collaborative filtering by looking closely at embeddings, a critical building block of many deep learning algorithms.
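Here is a minimal sketch of the matrix-factorisation idea in plain Python (not the course’s code). Each user and each item gets a short vector of learned numbers (its embedding), a rating is predicted as the dot product of the two vectors, and SGD adjusts the vectors so the known ratings are reproduced. A missing entry is then filled in with the same dot product. The ratings, the vector length `k`, and the hyperparameters are made up for illustration.

```python
import random


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def factorize(ratings, n_users, n_items, k=2, lr=0.02, epochs=2000, seed=0):
    """Learn user/item embeddings so that dot(U[u], V[i]) ~ rating, via SGD."""
    rng = random.Random(seed)
    U = [[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(n_users)]
    V = [[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = dot(U[u], V[i]) - r
            for f in range(k):
                u_f, v_f = U[u][f], V[i][f]
                # gradient of err**2 / 2 with respect to U[u][f] and V[i][f]
                U[u][f] -= lr * err * v_f
                V[i][f] -= lr * err * u_f
    return U, V


def mse(ratings, U, V):
    """Mean squared error of the predictions on the known ratings."""
    return sum((dot(U[u], V[i]) - r) ** 2 for u, i, r in ratings) / len(ratings)


if __name__ == "__main__":
    # (user, item, rating); user 1 has not rated item 2
    ratings = [
        (0, 0, 5.0), (0, 1, 4.0), (0, 2, 1.0),
        (1, 0, 4.0), (1, 1, 5.0),
        (2, 0, 1.0), (2, 1, 2.0), (2, 2, 5.0),
    ]
    U, V = factorize(ratings, n_users=3, n_items=3)
    print("training MSE:", round(mse(ratings, U, V), 4))
    print("predicted rating for user 1, item 2:", round(dot(U[1], V[2]), 2))
```

The rows of `U` and `V` are exactly the kind of embeddings the lesson goes on to examine; a deep learning version replaces the plain dot product with a small neural network that takes the two embeddings as input.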

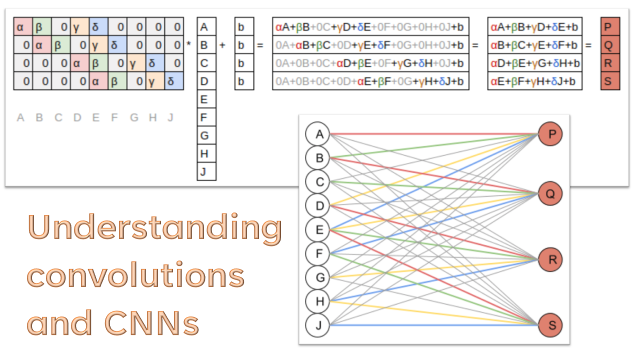

8: Convolutions (CNNs)

Here we dive into convolutional neural networks (CNNs) and see how they really work. We used plenty of CNNs in earlier lessons, but we didn’t peek inside them to see what’s really going on in there.

As well as learning about the most fundamental building block of CNNs, the convolution, we also look at pooling, dropout, and more.
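As a rough illustration of these building blocks, the plain-Python sketch below (not code from the lesson) slides a small kernel over an image to produce a feature map, downsamples it with non-overlapping 2×2 max pooling, and applies dropout. As in most deep learning libraries, the “convolution” is implemented as cross-correlation, meaning the kernel is not flipped. The edge-detecting kernel and the function names are illustrative assumptions.

```python
import random


def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Keep the largest value in each non-overlapping size x size window
    (incomplete windows at the edges are skipped)."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dropout(values, p, rng):
    """Inverted dropout: zero each value with probability p, scale survivors by 1/(1-p)."""
    return [0.0 if rng.random() < p else v / (1 - p) for v in values]


if __name__ == "__main__":
    image = [[0, 0, 0, 1, 1] for _ in range(4)]  # dark on the left, bright on the right
    vertical_edge = [[-1, 1], [-1, 1]]  # responds to left-to-right increases
    fmap = conv2d(image, vertical_edge)
    print(fmap)  # [[0, 0, 2, 0], [0, 0, 2, 0], [0, 0, 2, 0]]
    print(max_pool2d(fmap))  # [[0, 2]]
    print(dropout([1.0, 1.0, 1.0, 1.0], 0.5, random.Random(0)))
```

The feature map lights up only where the image brightens from left to right, and pooling keeps that response while halving the resolution. Dropout is applied only during training; scaling the surviving values keeps their expected sum unchanged, so nothing needs rescaling at inference time.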

A vibrant community

Many fast.ai alumni have told us that one of their favorite things about the course is the generous and thoughtful community of interesting people that has sprung up around it.

If you need help, or just want to chat about what you’re learning about (or show off what you’ve built!), there’s a wonderful online community ready to support you at forums.fast.ai. Every lesson has a dedicated forum thread—with many common questions already answered.

For real-time conversations about the course, there’s also a very active Discord server.

Get started

To get started with the course now, head over to Practical Deep Learning for Coders 2022!