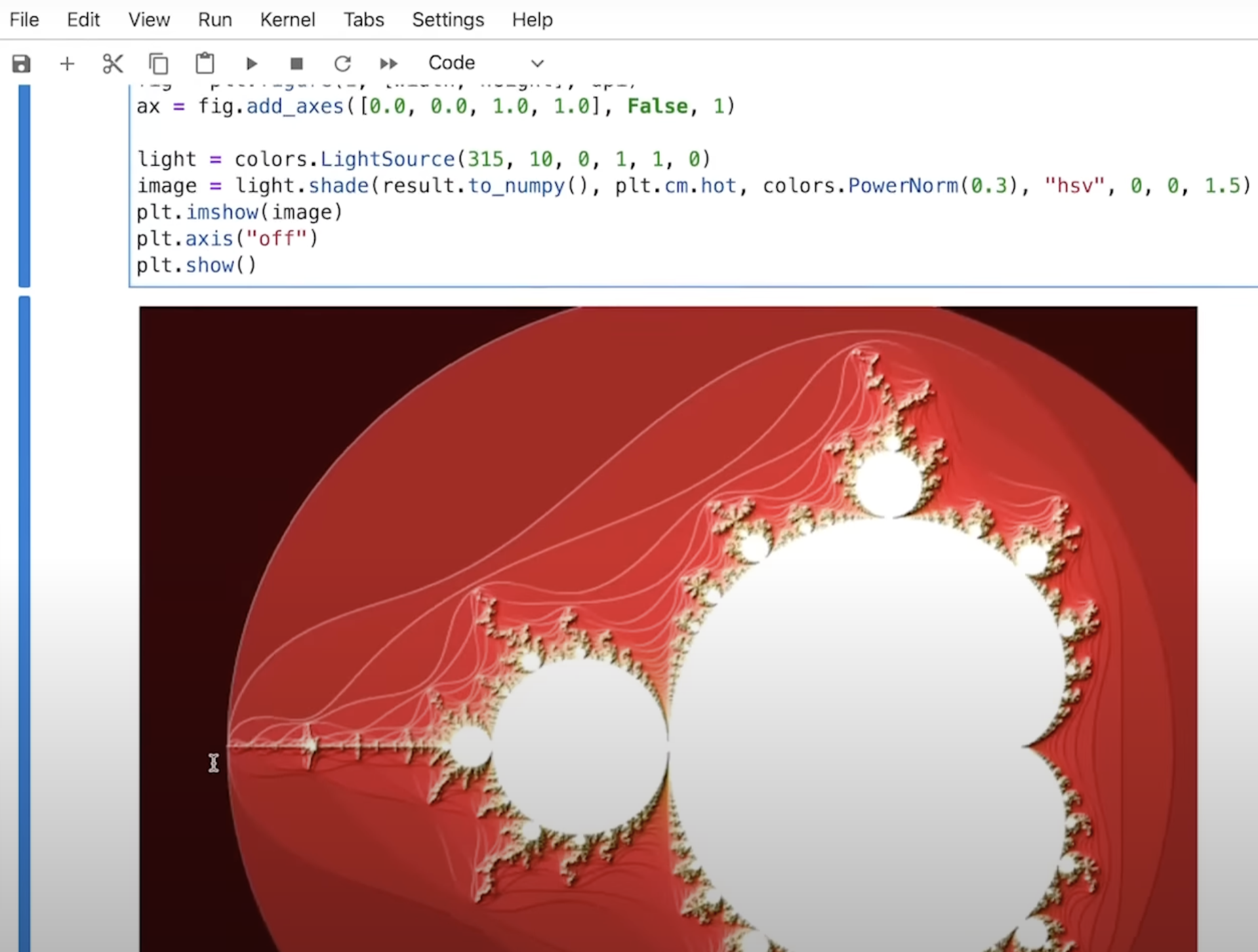

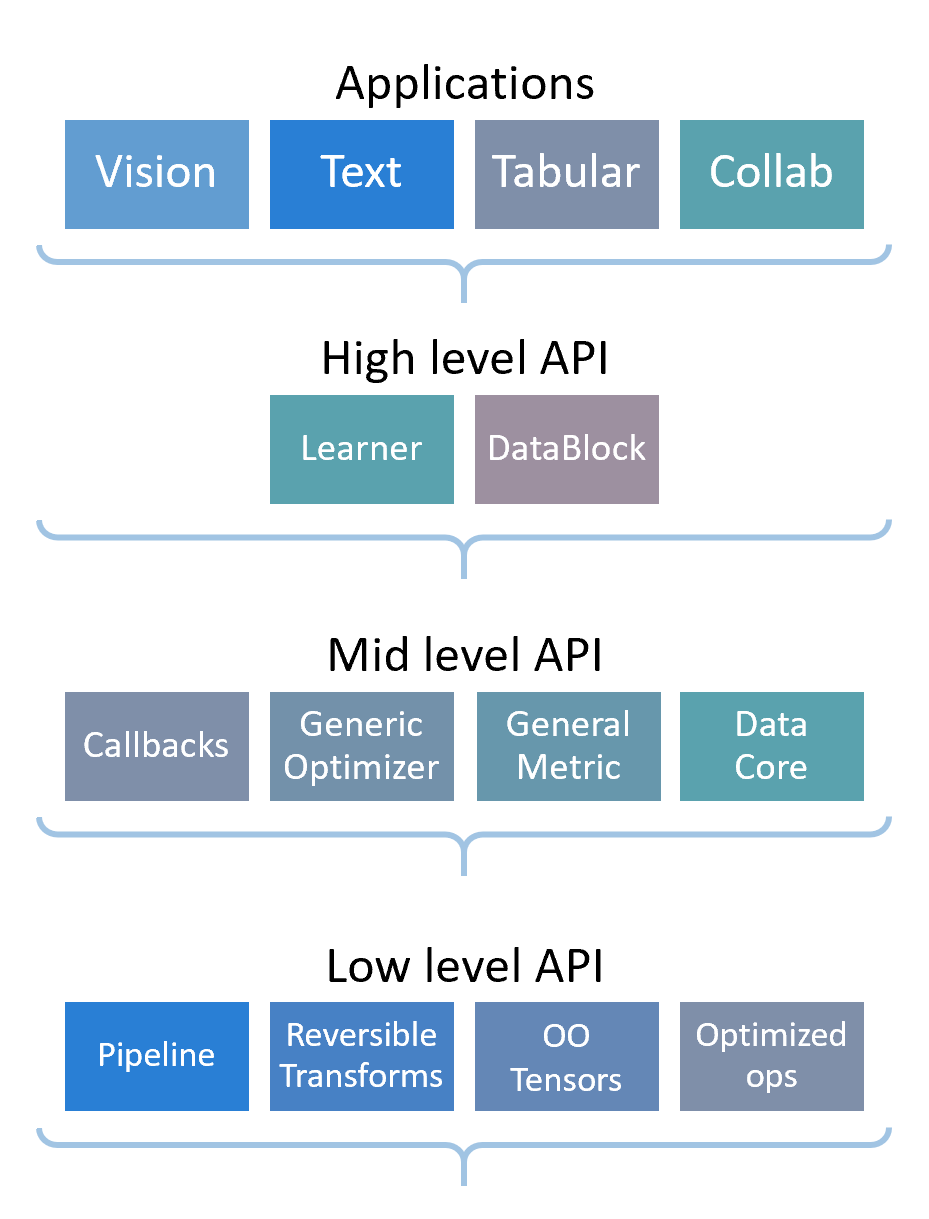

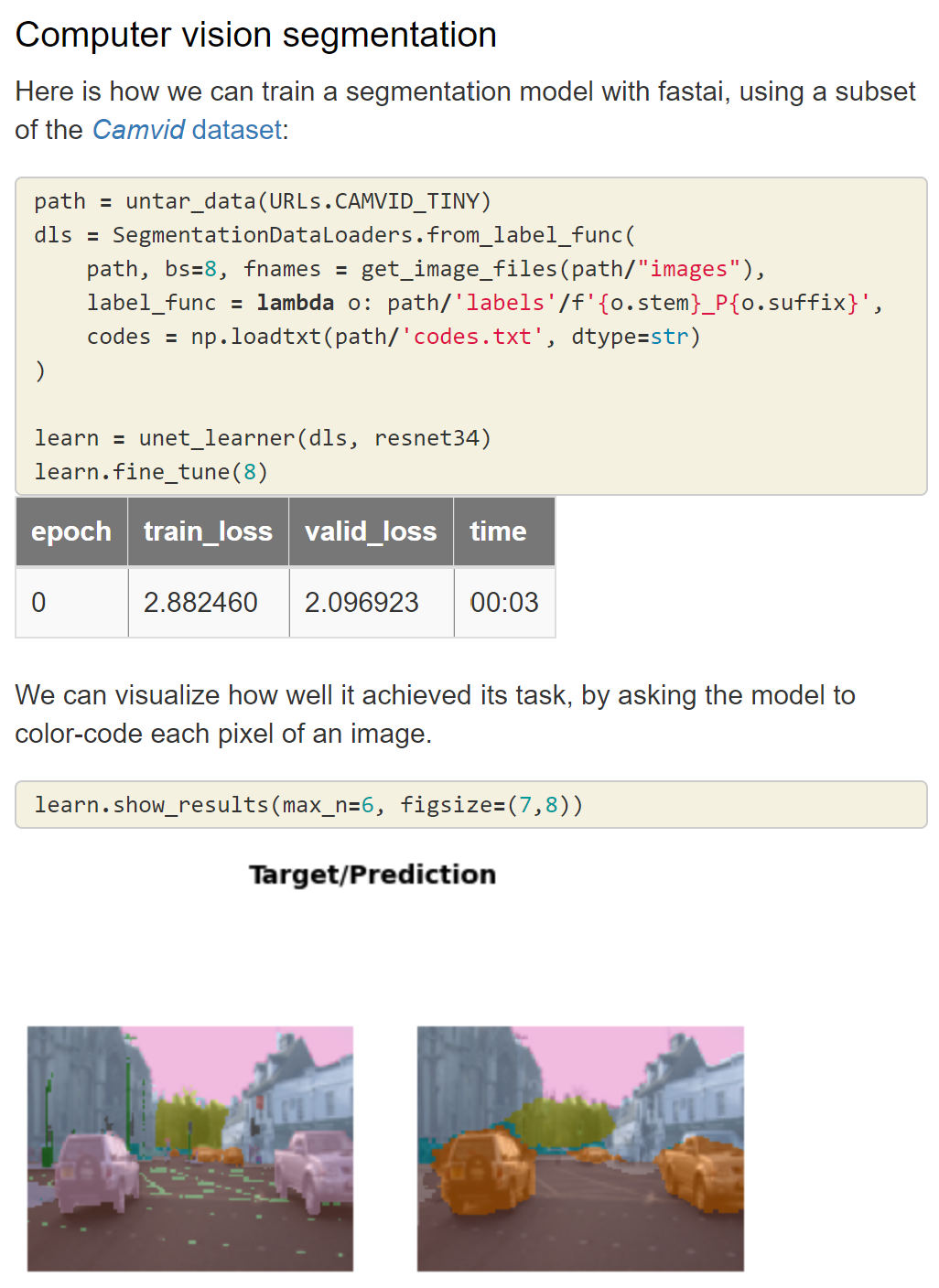

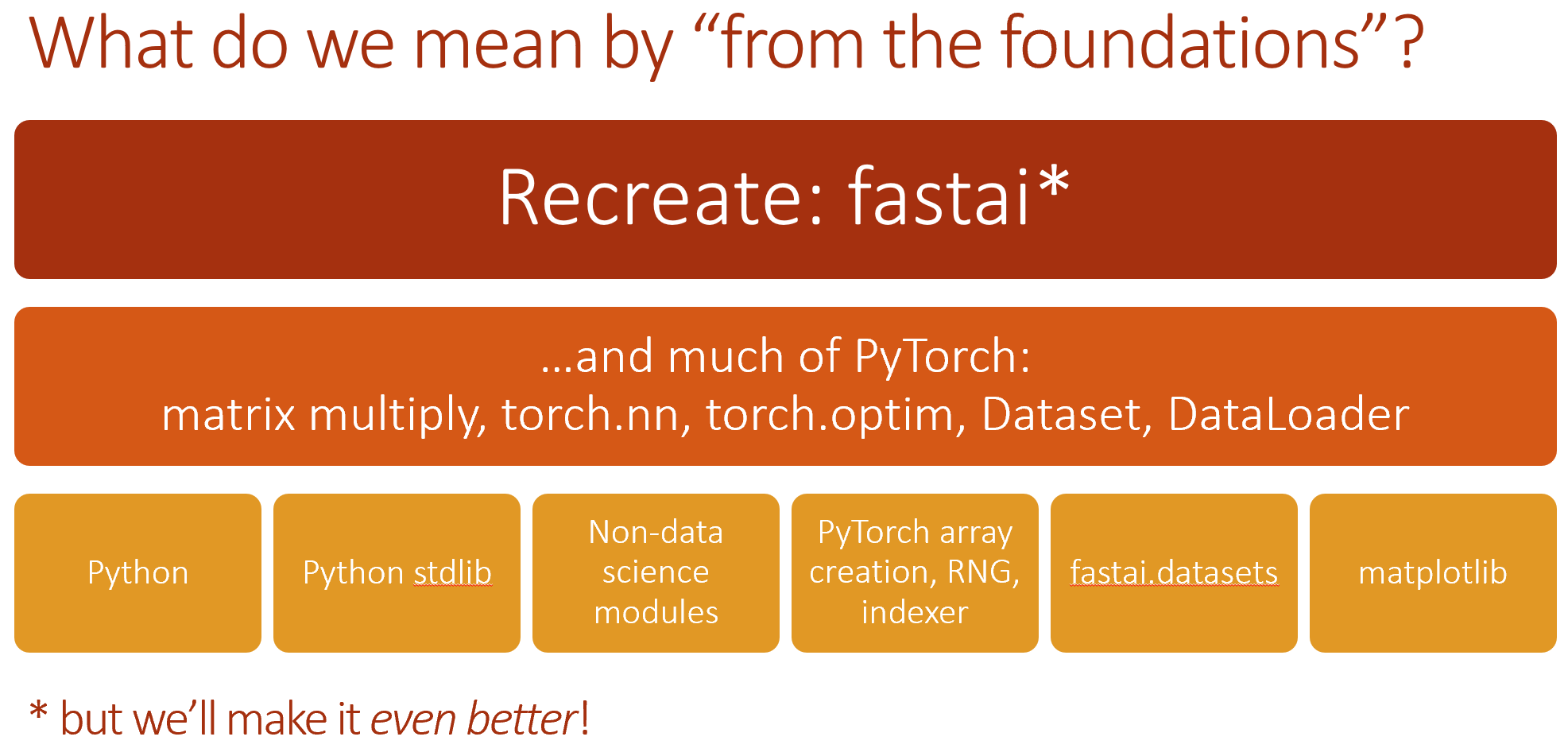

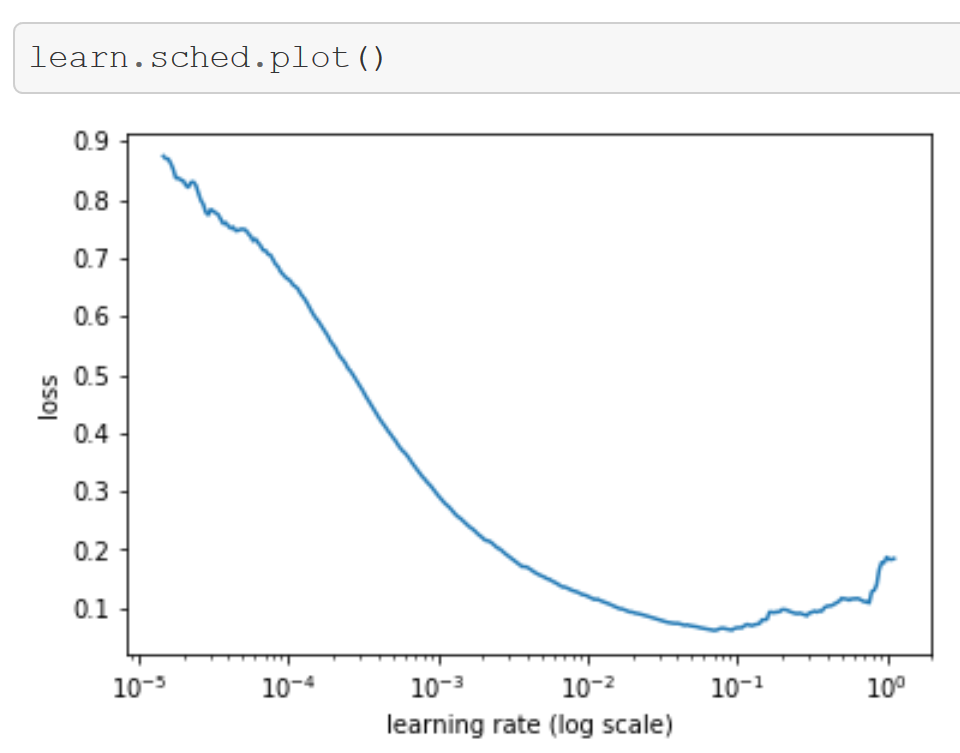

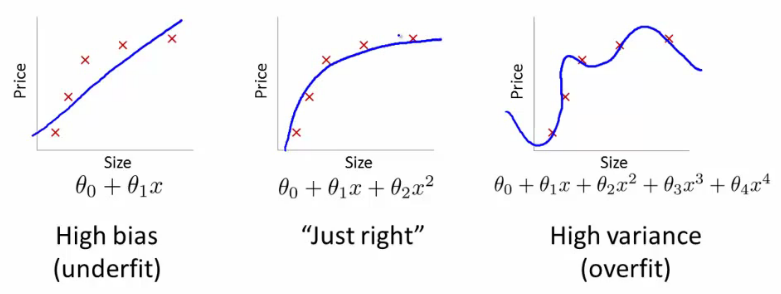

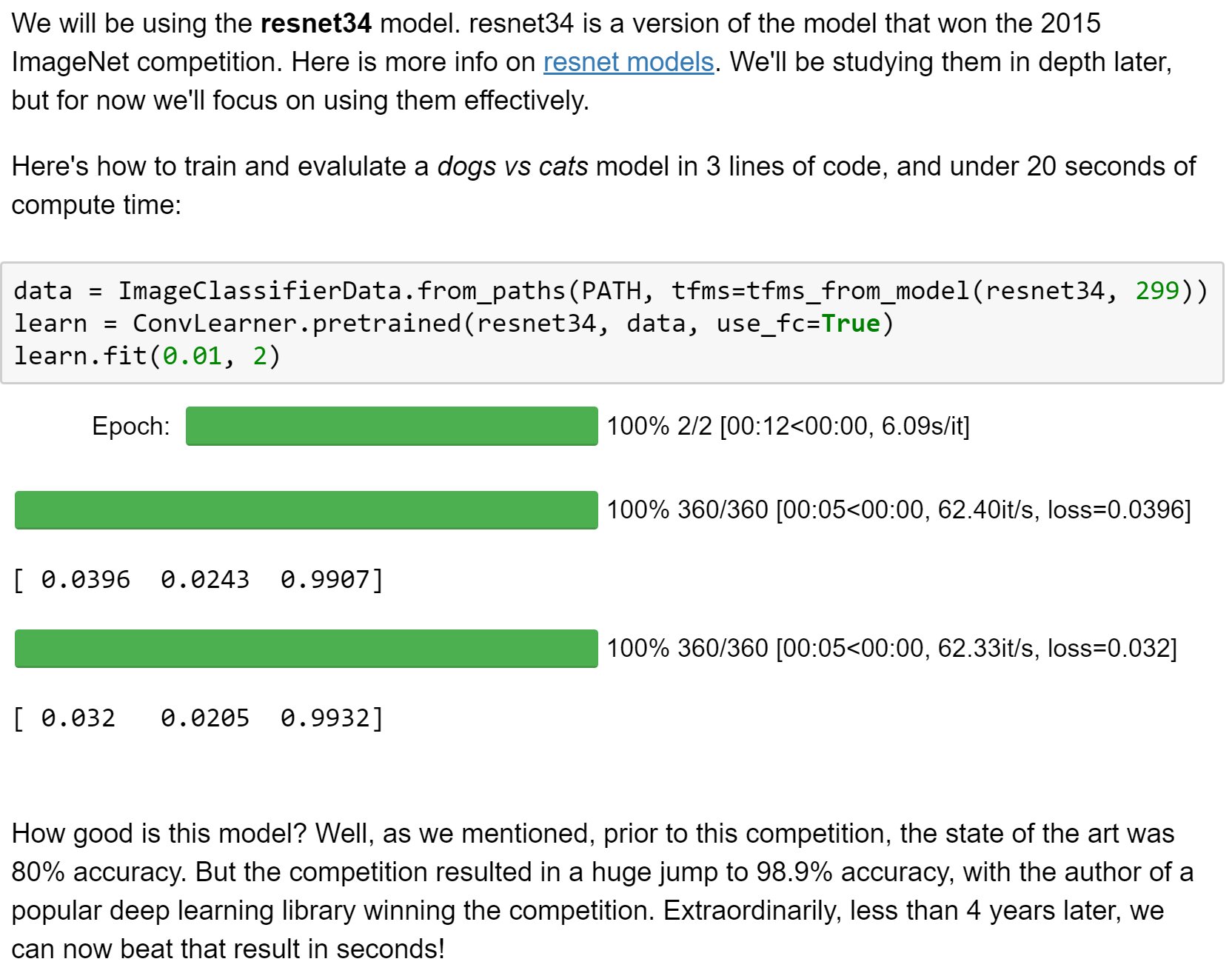

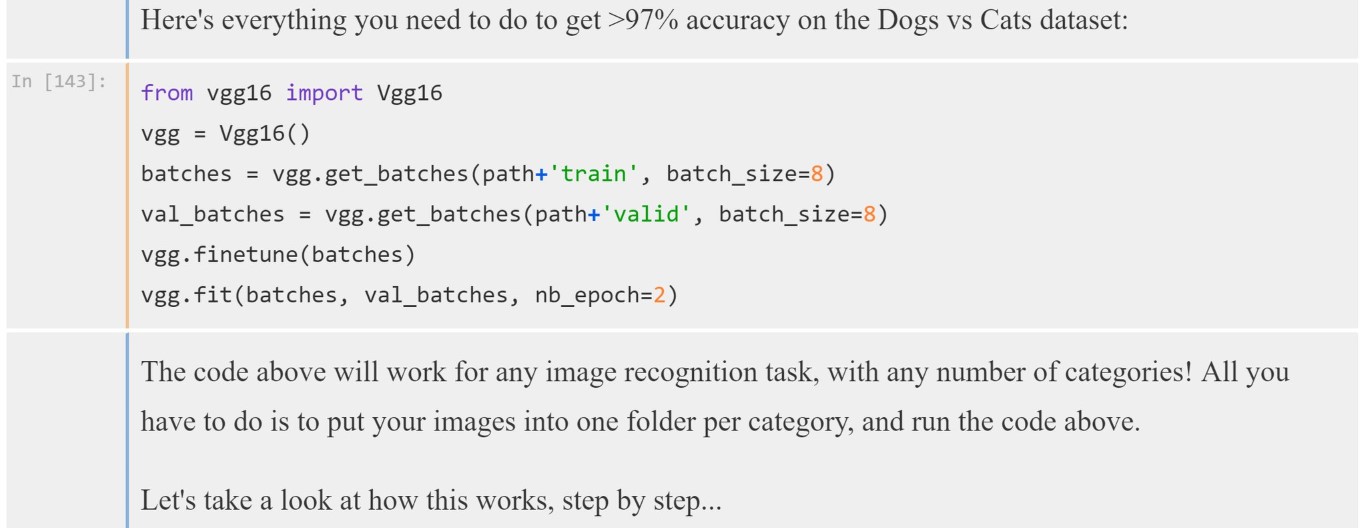

- Courses: How to Solve it With Code; Practical Deep Learning for Coders

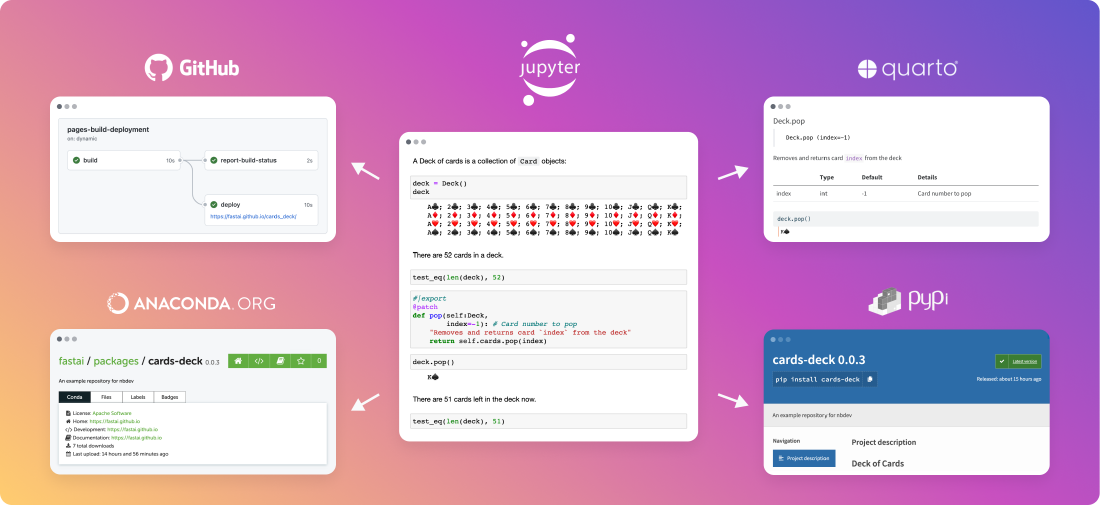

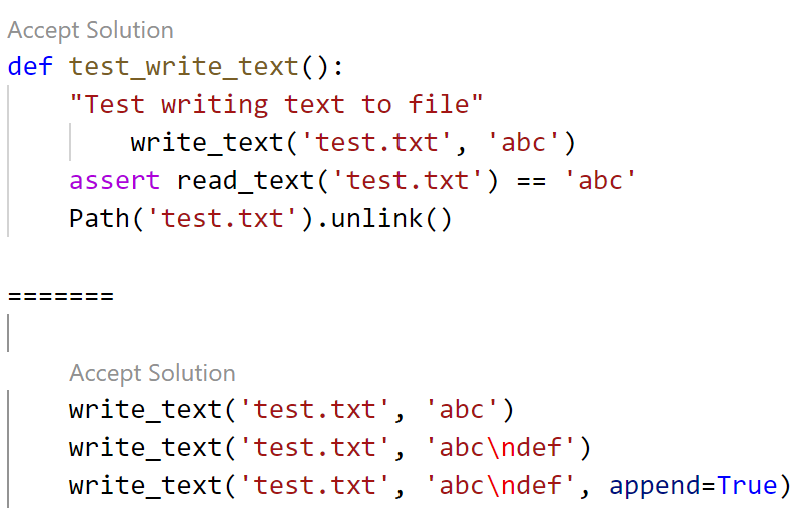

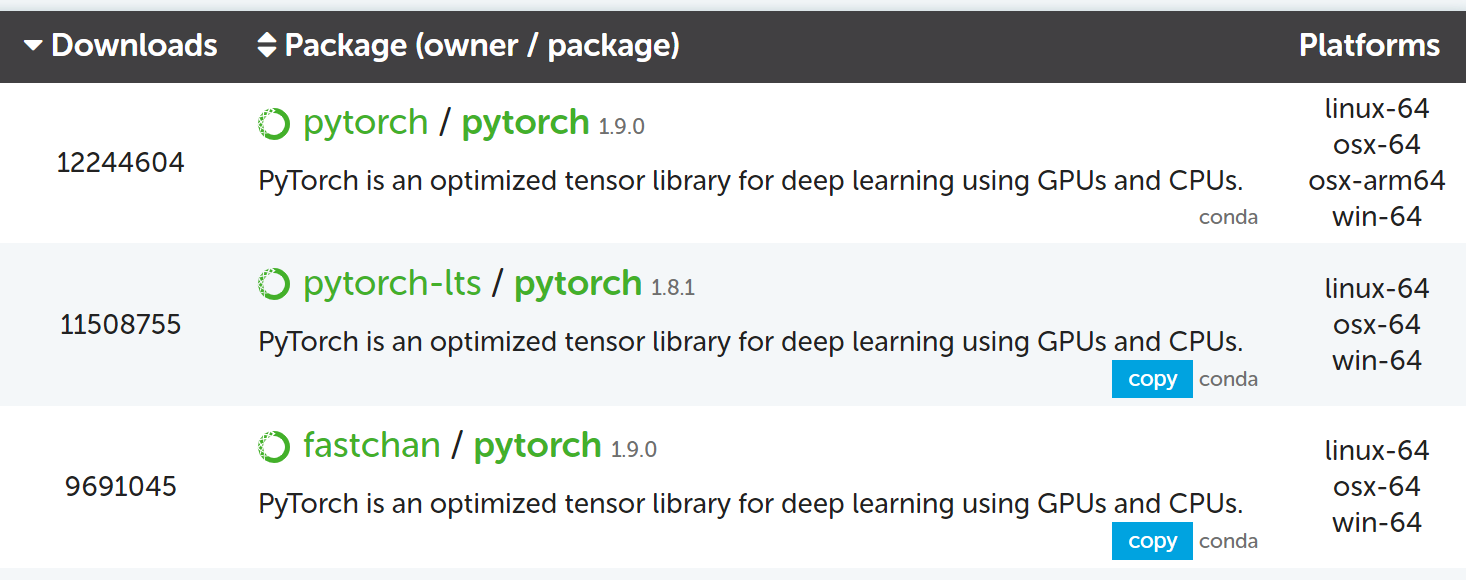

- Software: fastai for PyTorch; nbdev

- Book: Practical Deep Learning for Coders with fastai and PyTorch

- In the news: The Economist; The New York Times; MIT Tech Review

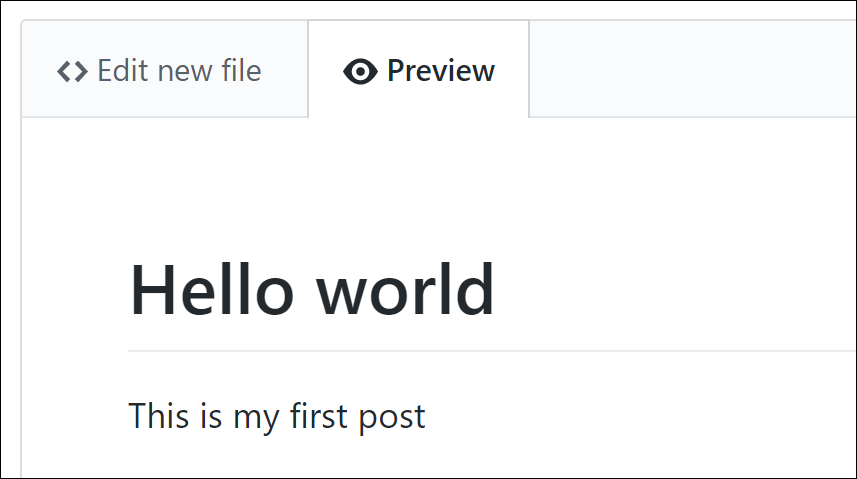

Blog

Breaking the Spell of Vibe Coding

Sinister variations on the positive state of flow

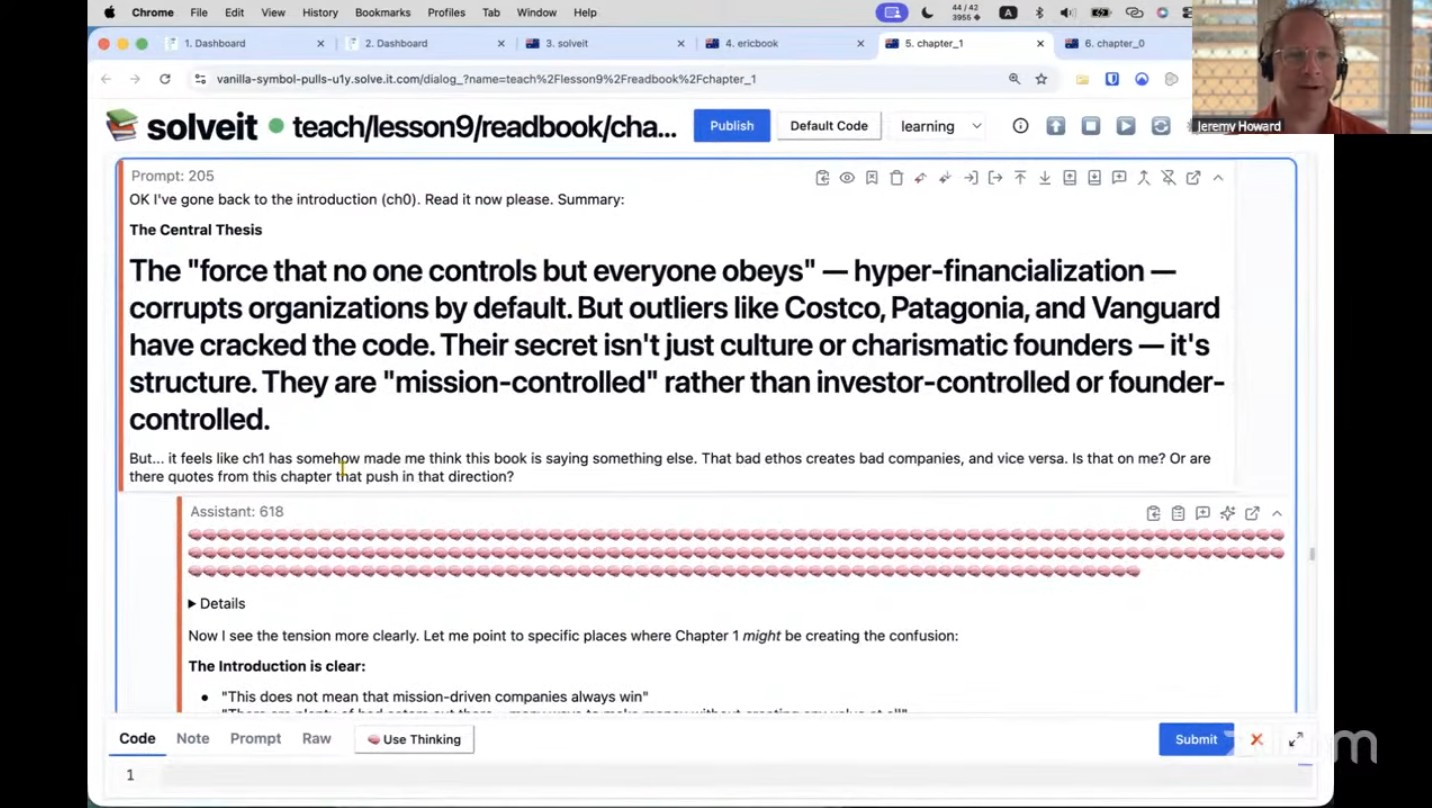

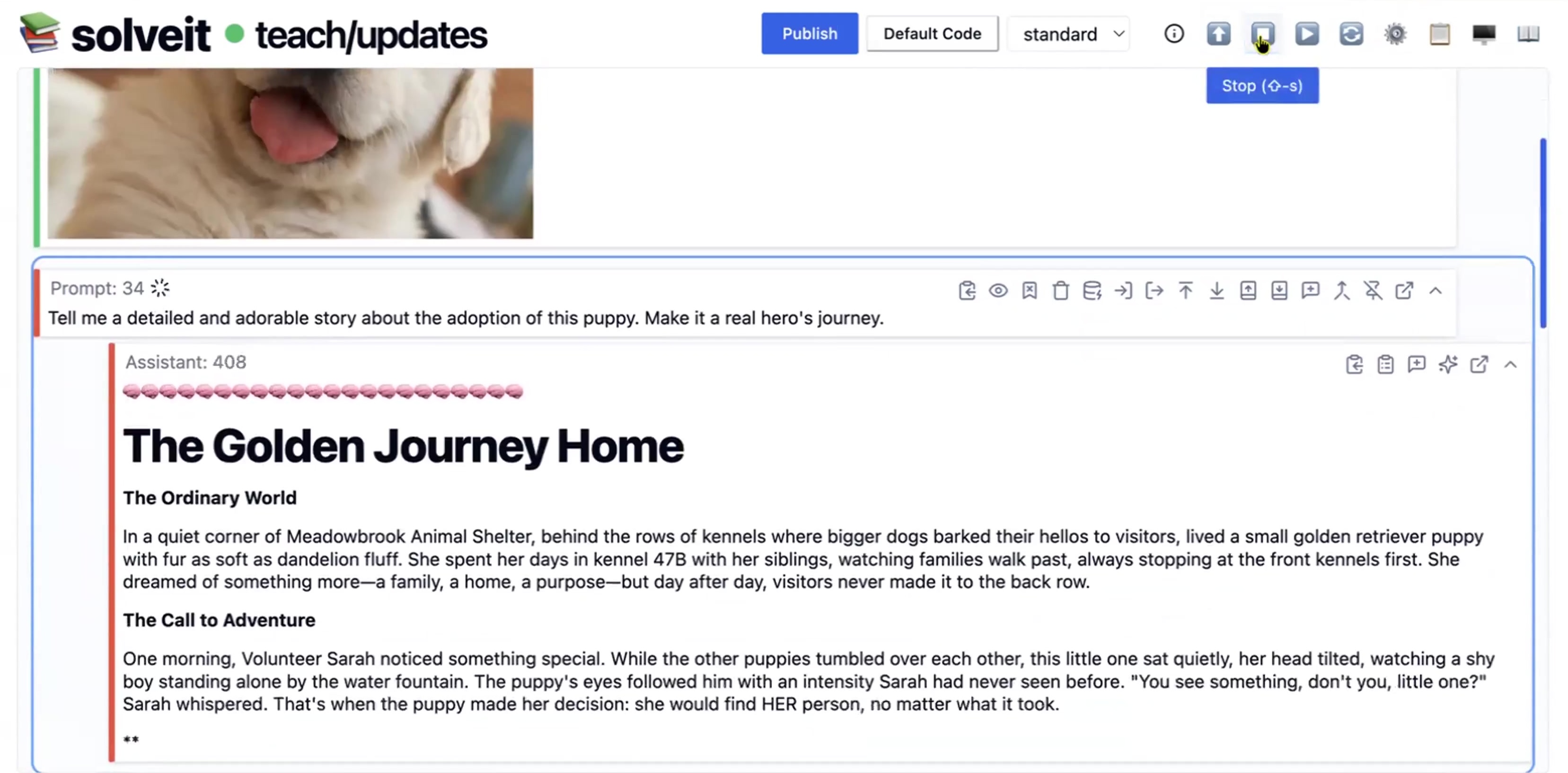

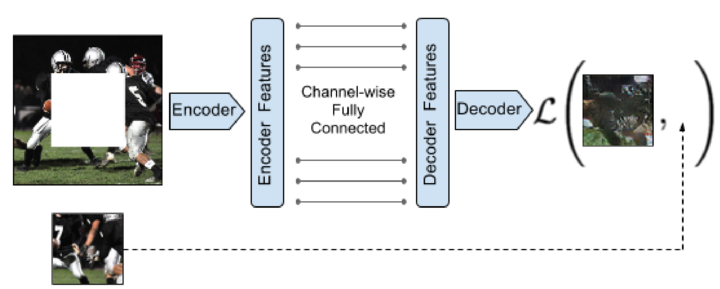

How To Use AI for the Ancient Art of Close Reading

Experiments in reading with LLMs

Qualitative humanities research is crucial to AI

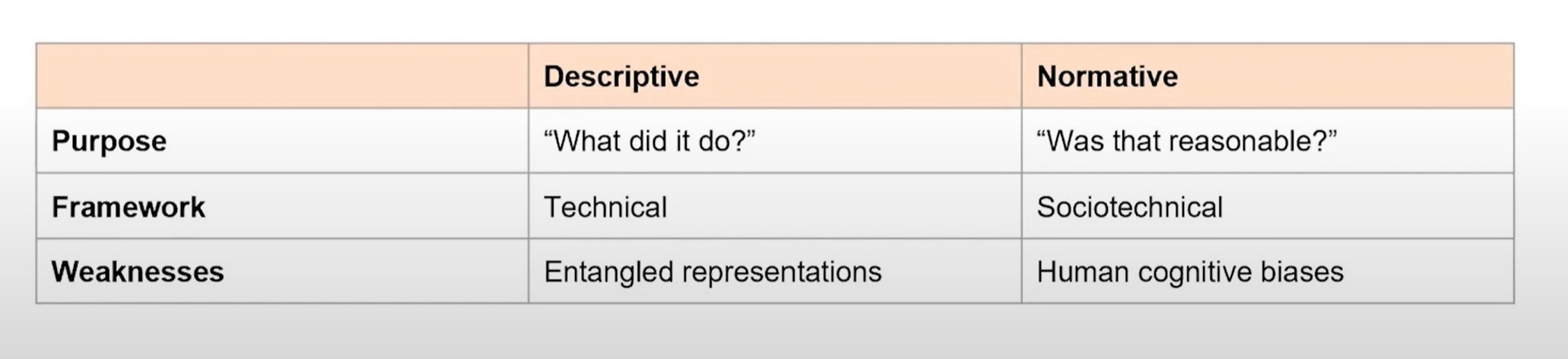

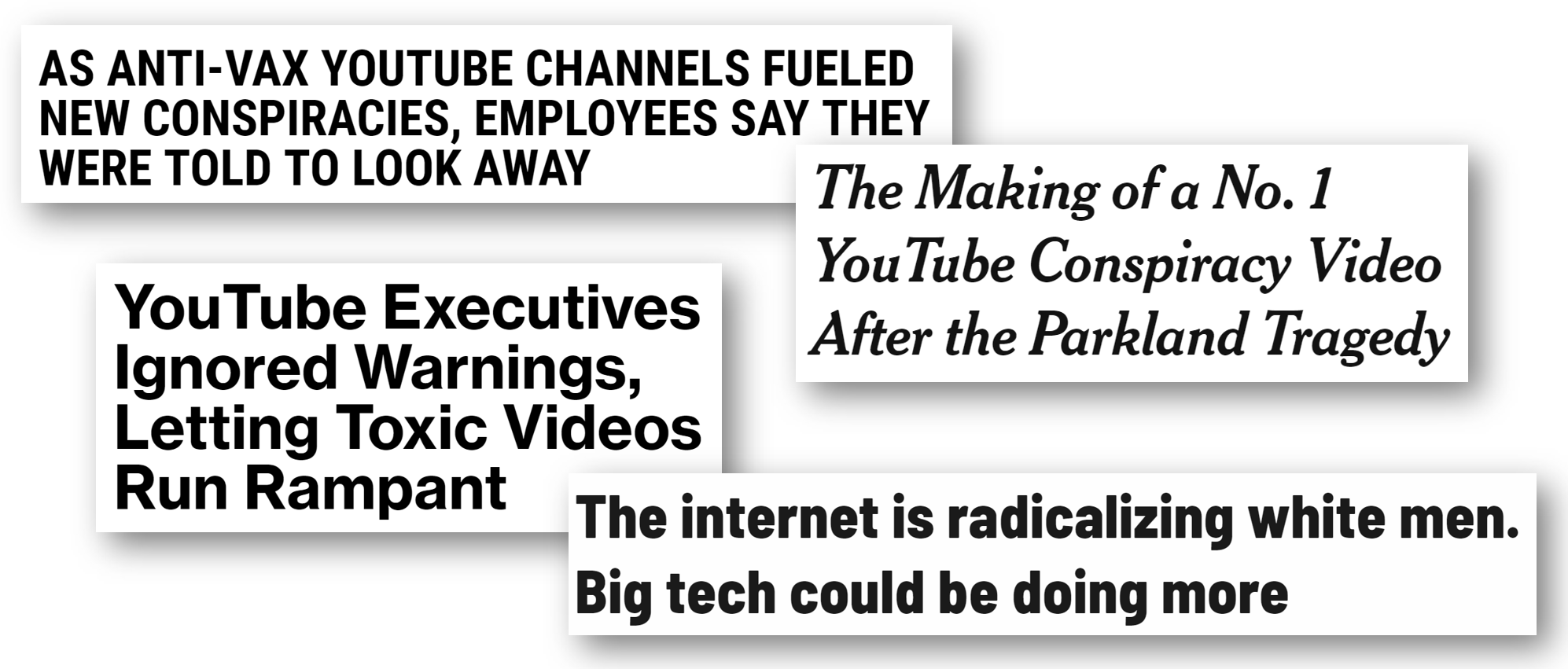

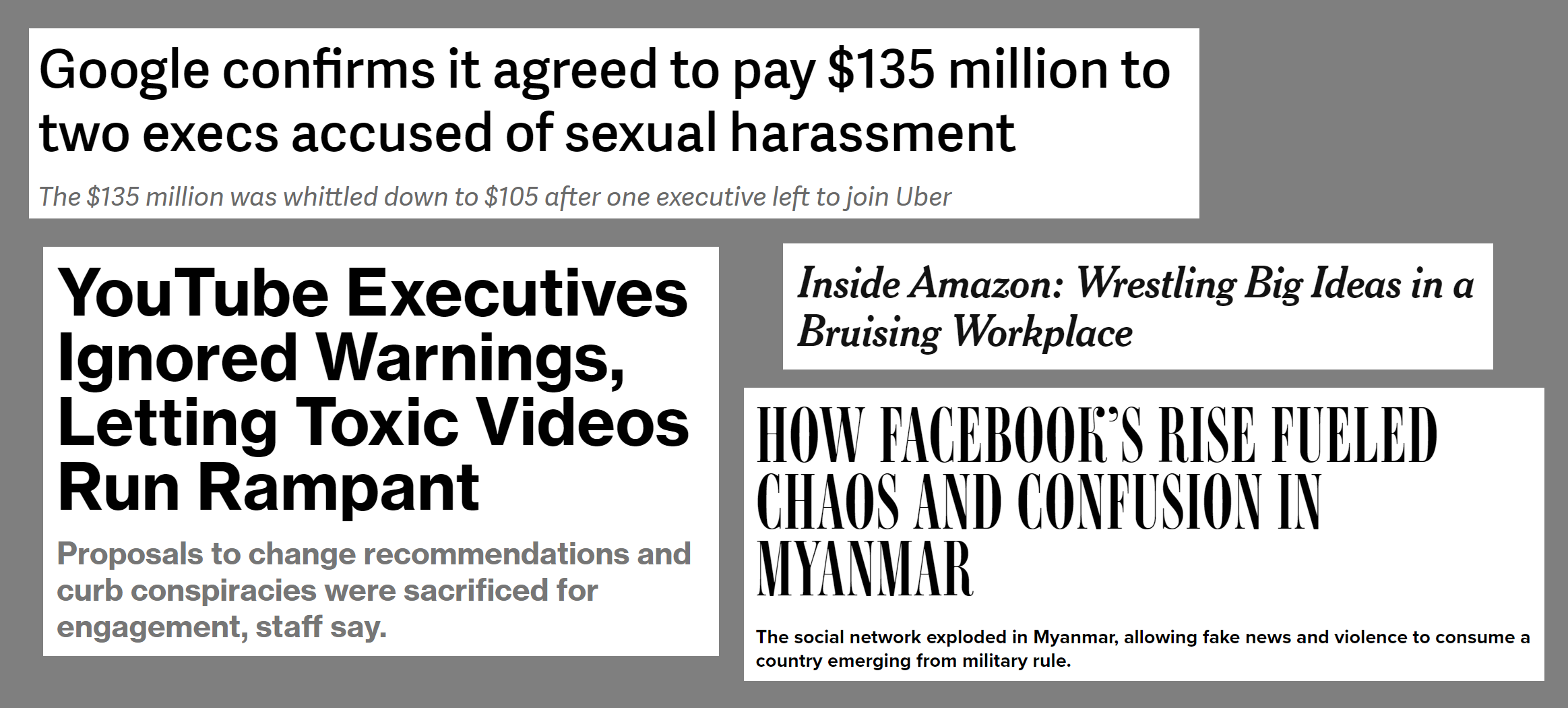

Following the thread of any seemingly quantitative issue in AI ethics (such as determining if software to rate loan applicants is racially biased or evaluating YouTube’s recommendation system) quickly leads to a host of qualitative questions. Unfortunately, there is often a large divide between computer scientists and social scientists, with over-simplified assumptions and fundamental misunderstandings of one another.

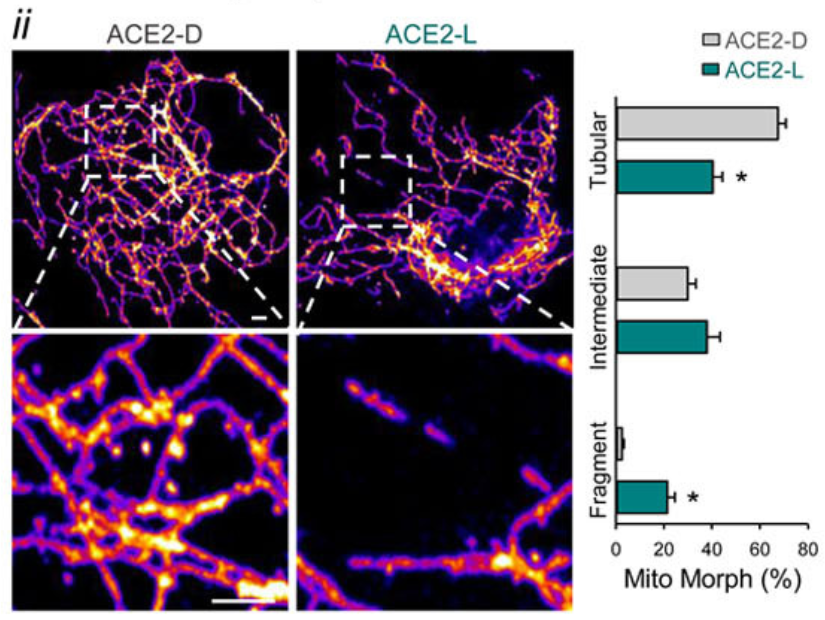

SARS-CoV-2 Spike Protein Impairment of Endothelial Function Does Not Impact Vaccine Safety

All approved SARS-CoV-2 vaccines provide far more benefits than risks. The very rare risk of VITT from the AstraZeneca (AZ) and Johnson & Johnson (JJ) vaccines is not due to the spike proteins, but is most likely due to details of their formulation.

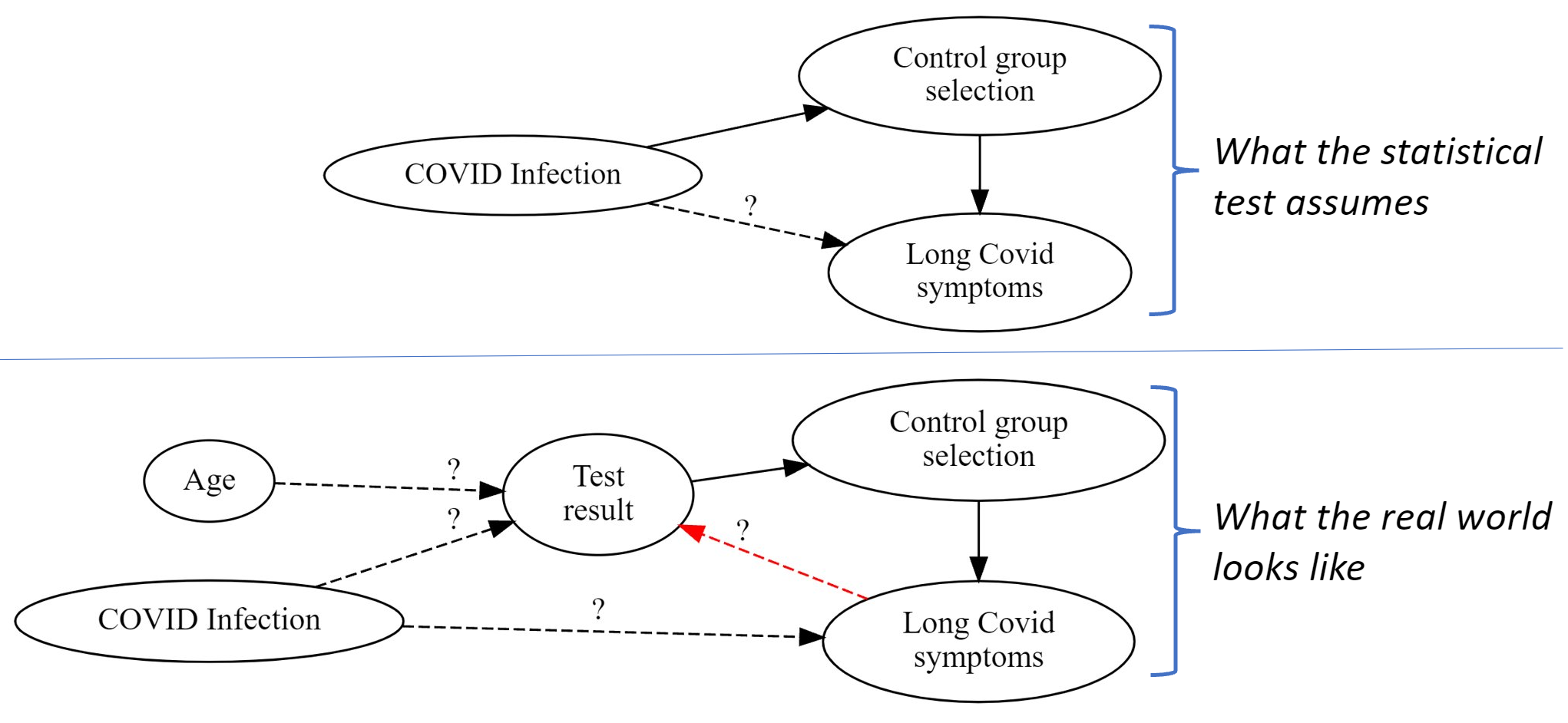

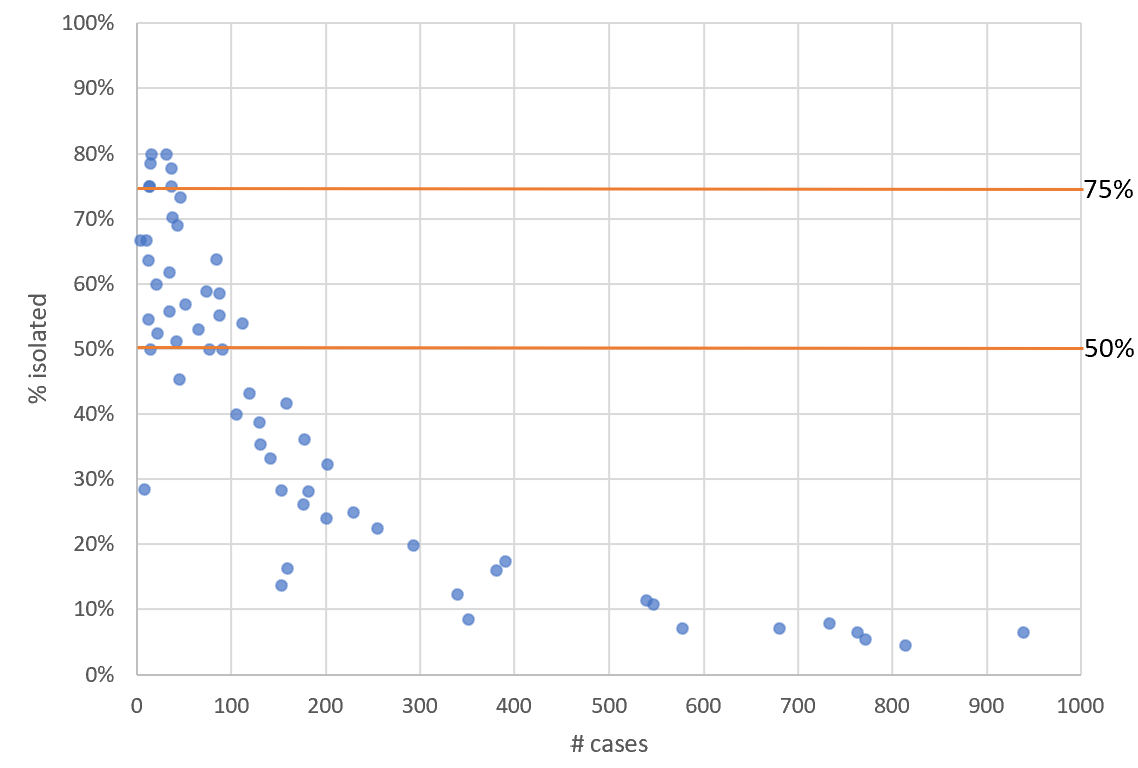

Statistical problems found when studying Long Covid in kids

Statistical tests need to be paired with proper data and study design to yield valid results. A recent review paper on Long Covid in children provides a useful example of how researchers can get this wrong. We use causal diagrams to decompose the problem and illustrate where errors were made.

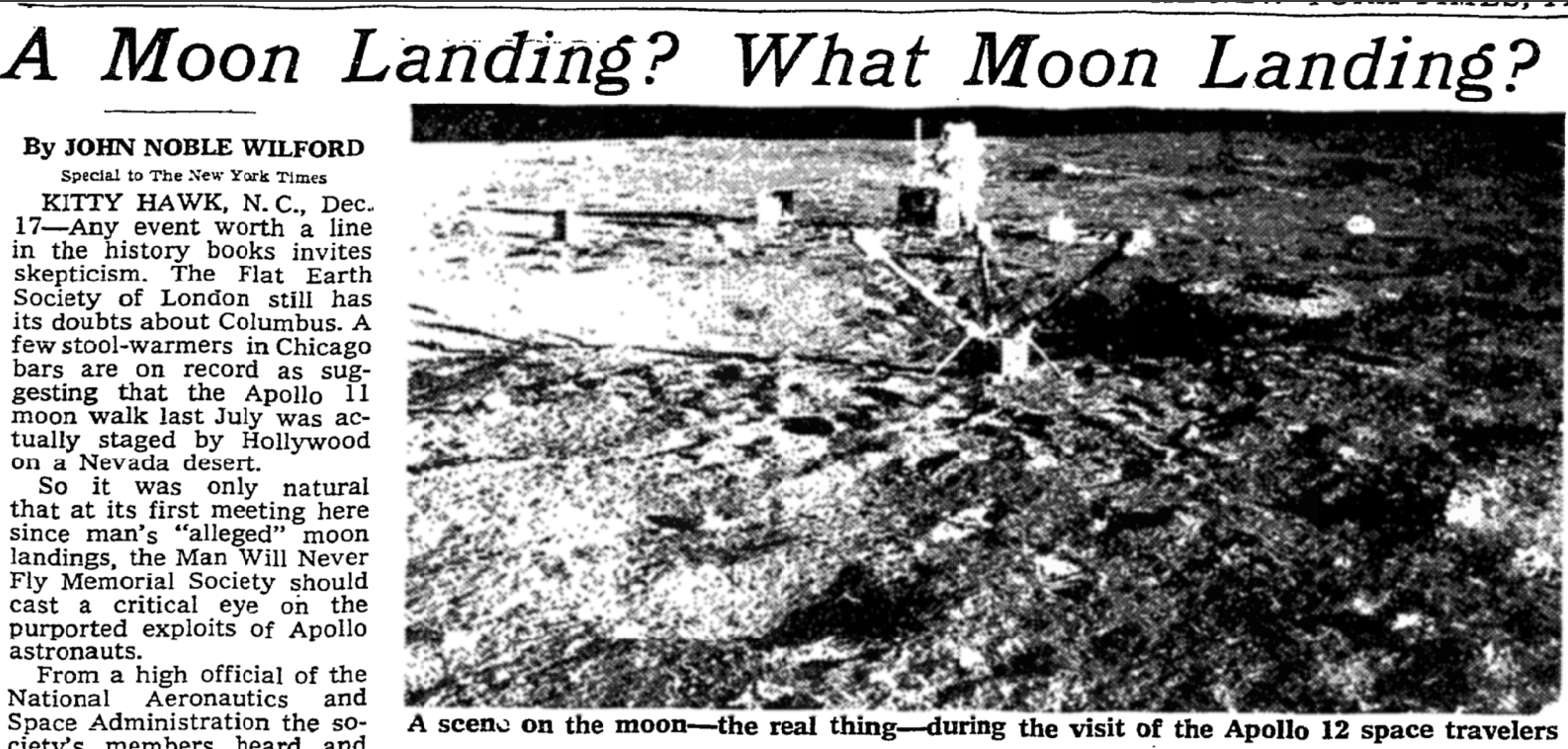

Inaccuracies, irresponsible coverage, and conflicts of interest in The New Yorker

Letter to the editor of The New Yorker on the irresponsible description of a suicide, undisclosed financial conflicts of interest, and omission of relevant medical research and historical context in its recent Long Covid article.

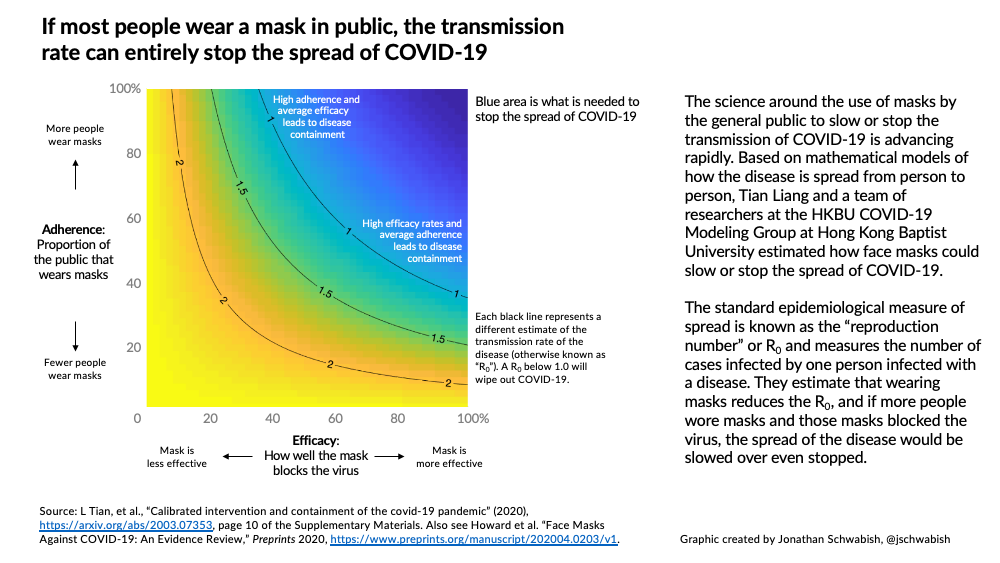

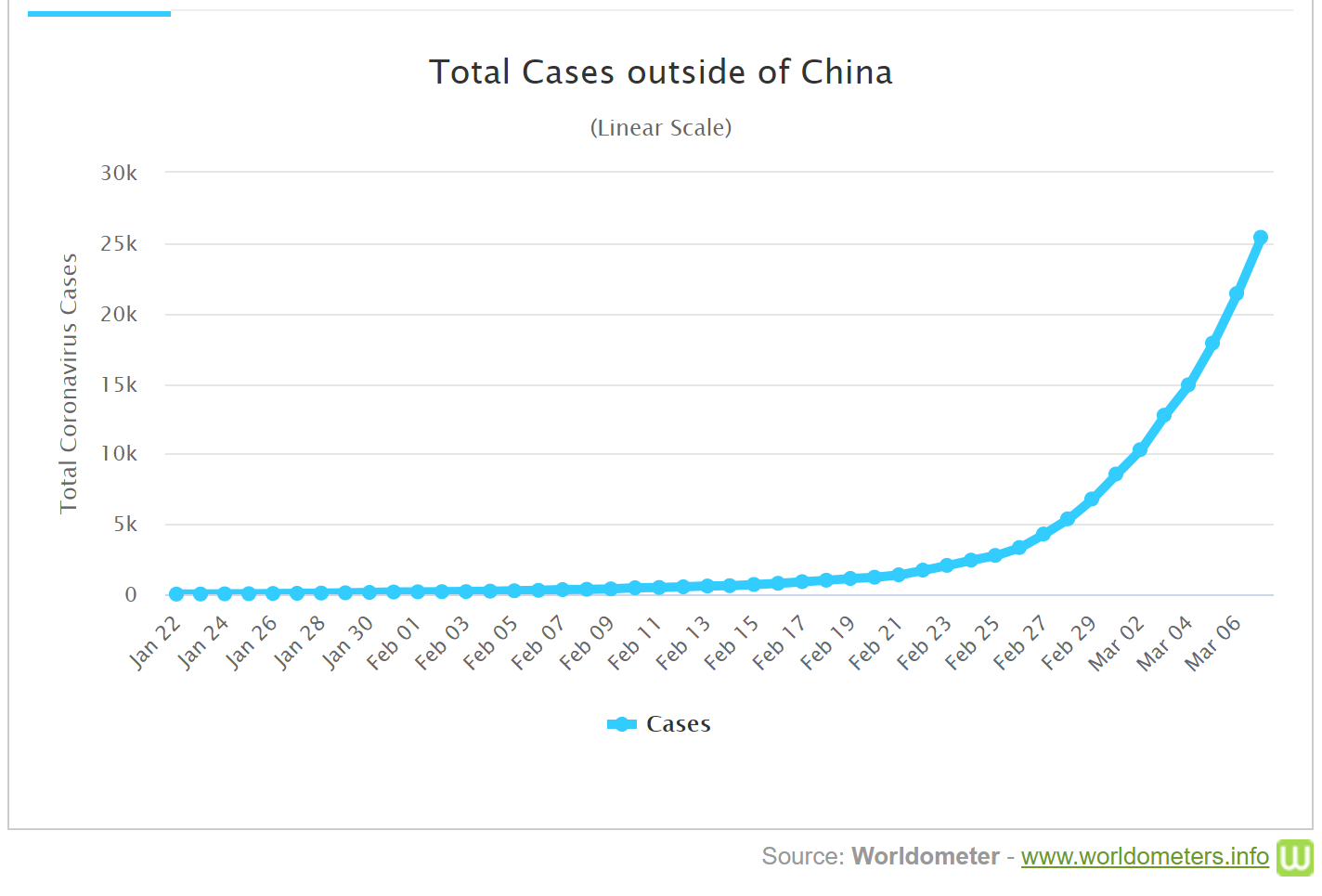

Australia can, and must, get R under 1.0

By using better masks, monitoring and improving indoor air quality, and rolling out rapid tests, we could quickly halt the current outbreaks in the Australian states of New South Wales (NSW) and Victoria. If we fail to do so and open up before 80% of all Australians are vaccinated, we may see tens of thousands of deaths and hundreds of thousands of children with chronic illness that could last for years.

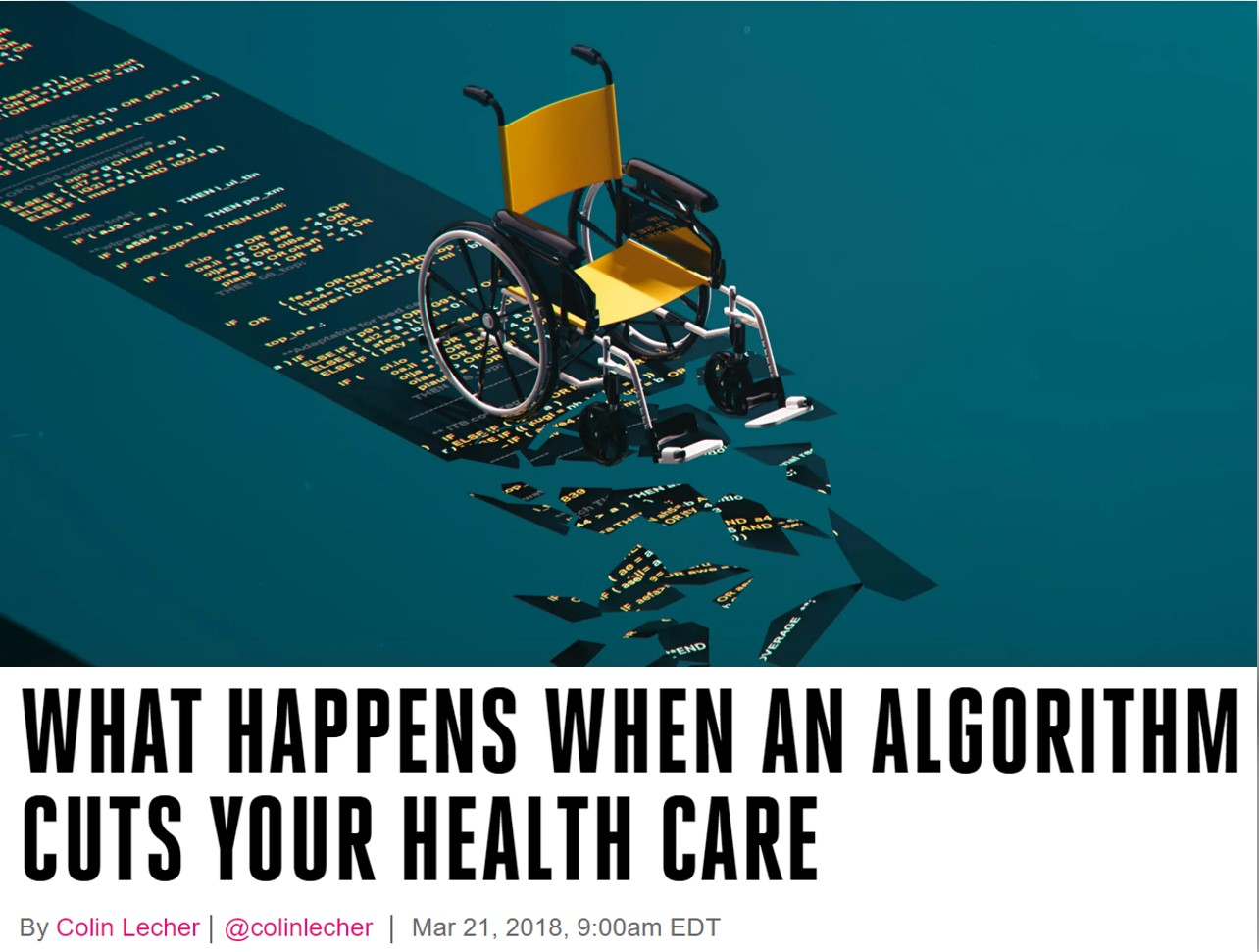

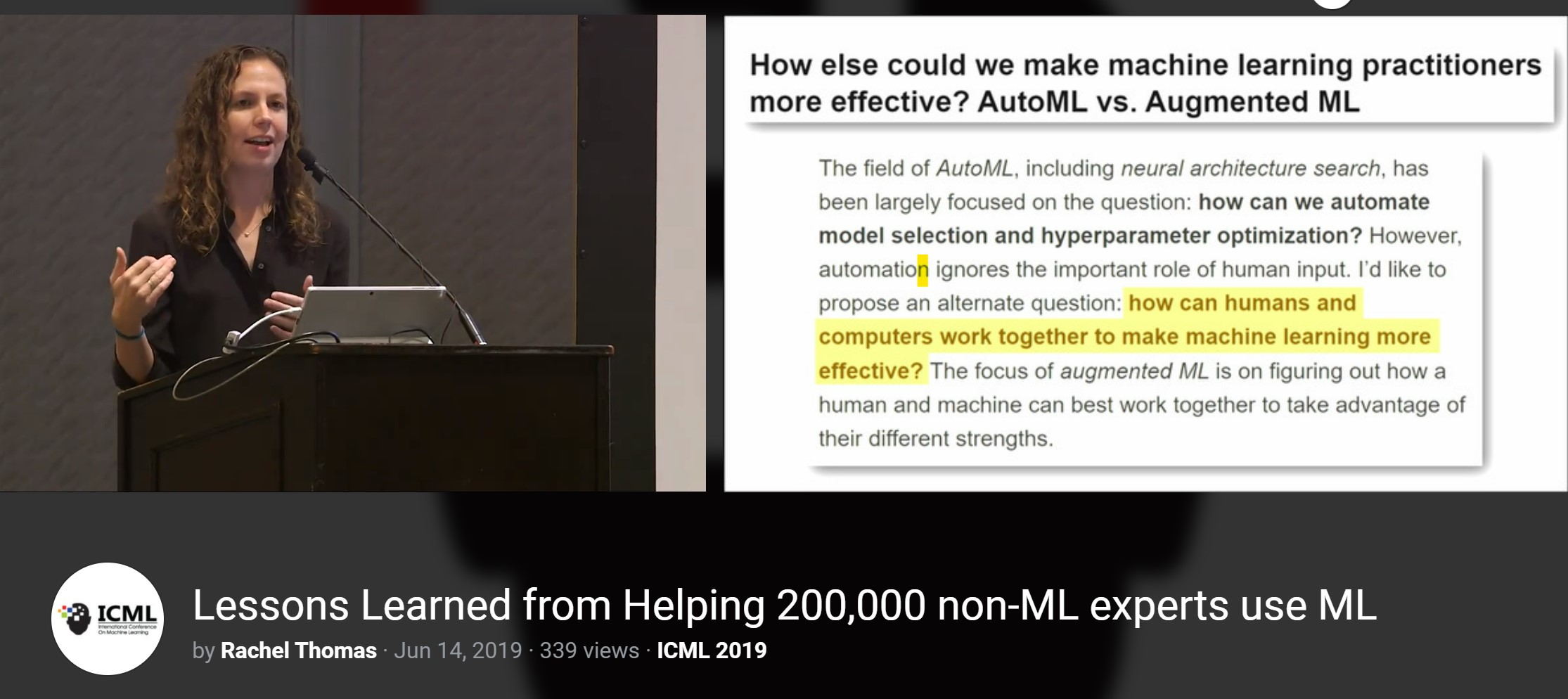

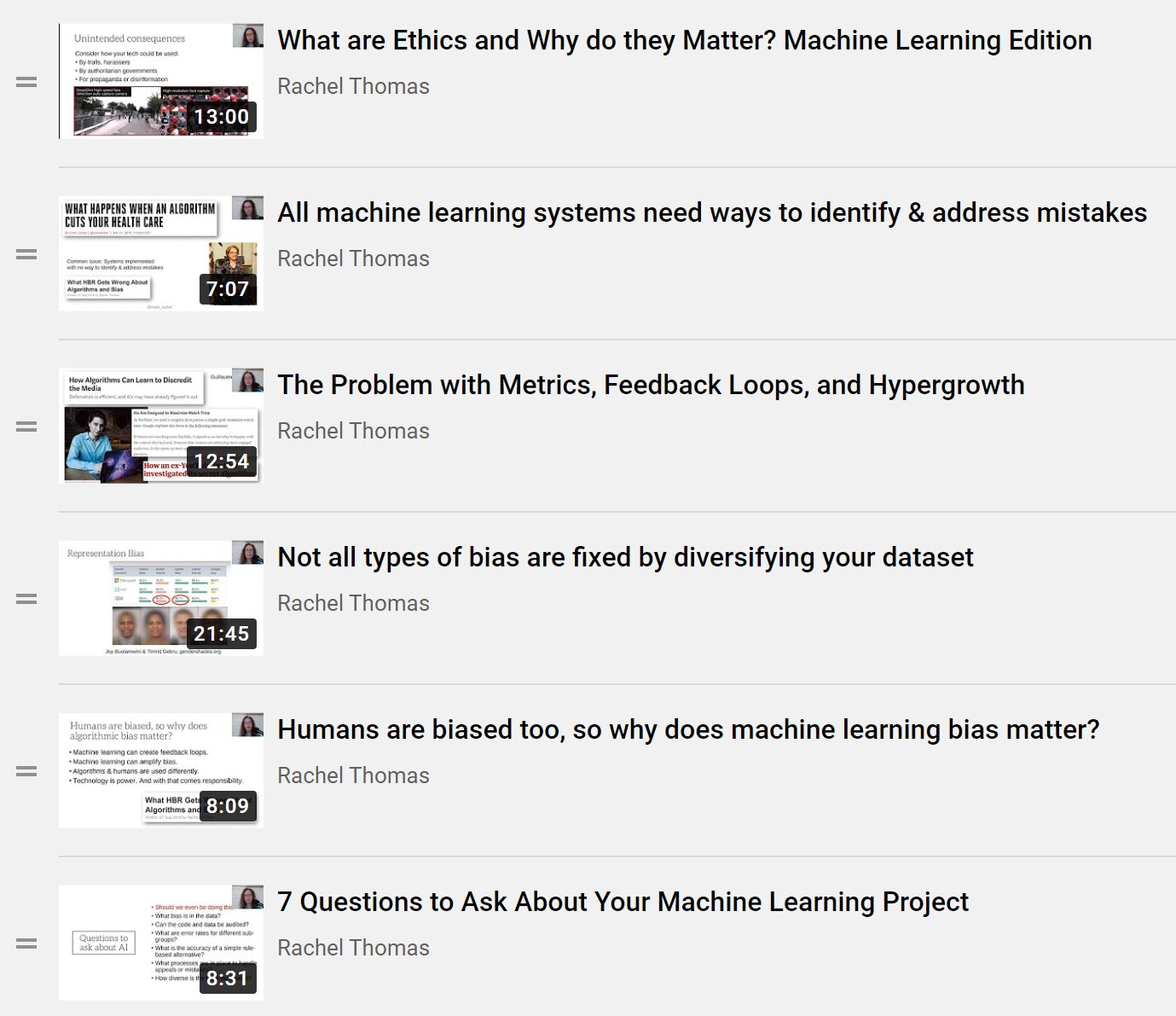

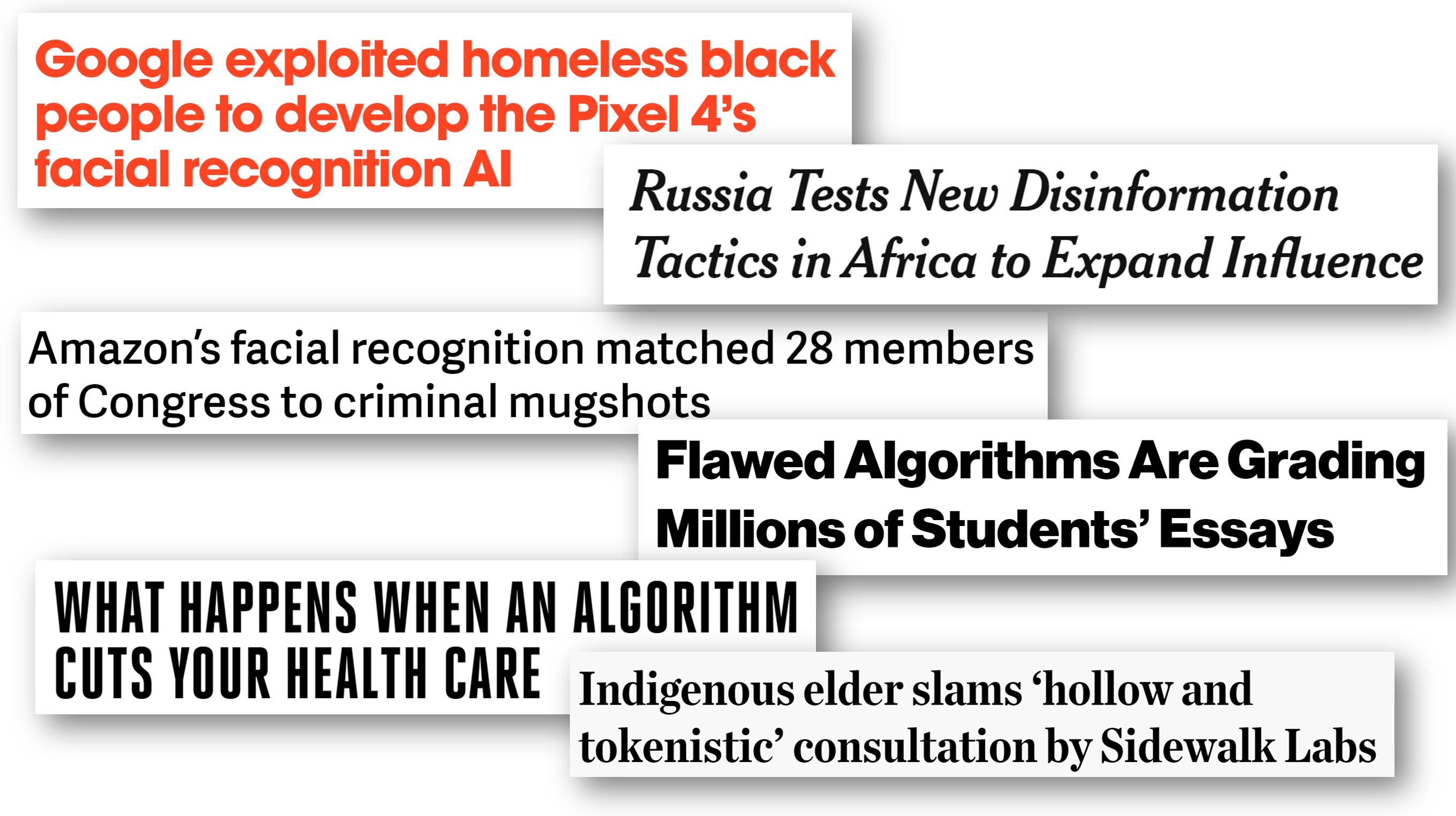

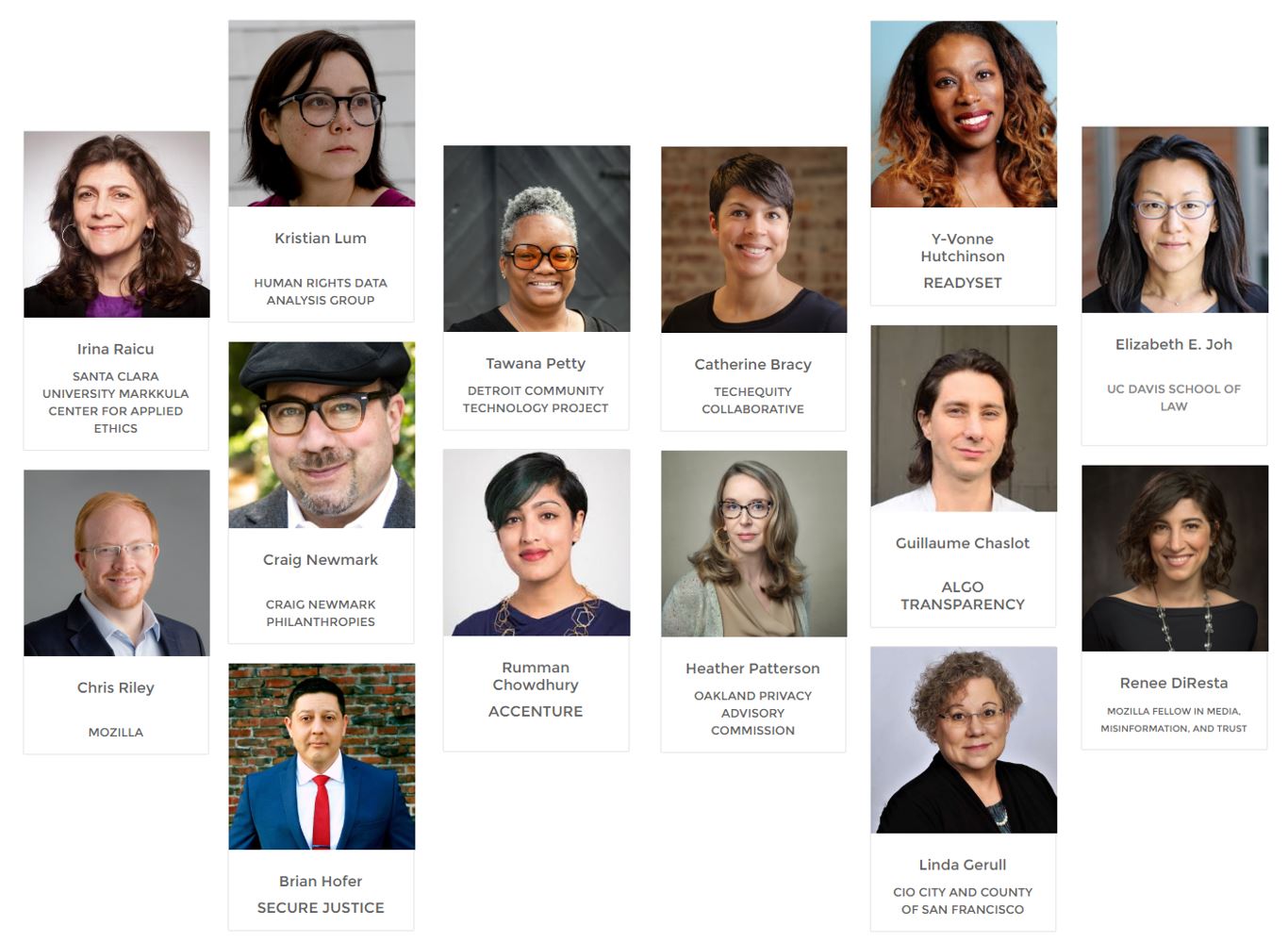

Applied Data Ethics, a new free course, is essential for all working in tech

A free online course from fast.ai and the University of San Francisco Data Institute covering disinformation, bias & fairness, ethical foundations, practical tools, privacy & surveillance, the Silicon Valley ecosystem, and algorithmic colonialism.

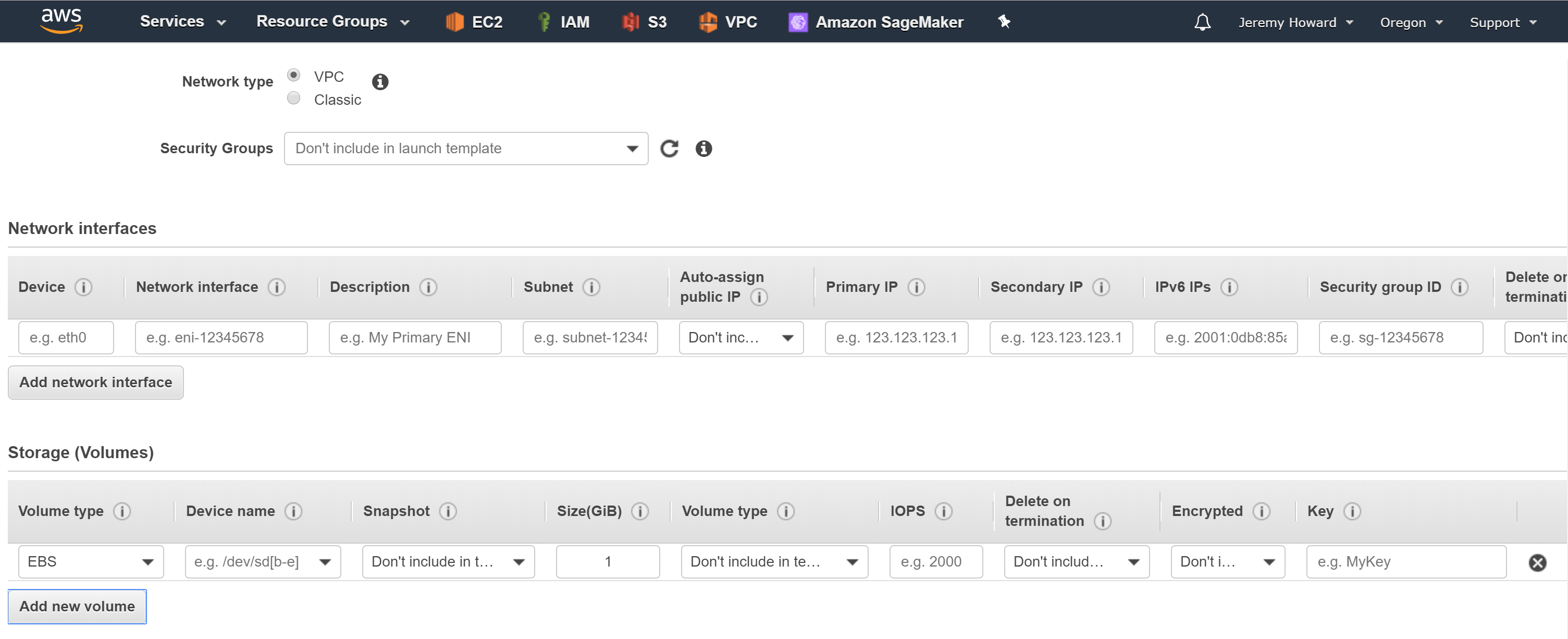

4 Principles for Responsible Government Use of Technology

As governments consider new uses of technology in public places, issues arise around surveillance of vulnerable populations, unintended consequences, and potential misuse. Several principles can help these decisions be made in a healthier and more responsible manner.